Parallel Computing

Experimental html version of downloadable textbook, see https://theartofhpc.com

2.1 : Introduction

2.1.1 : Functional parallelism versus data parallelism

2.1.2 : Parallelism in the algorithm versus in the code

2.2 : Theoretical concepts

2.2.1 : Definitions

2.2.1.1 : Speedup and efficiency

2.2.1.2 : Cost-optimality

2.2.2 : Asymptotics

2.2.3 : Amdahl's law

2.2.3.1 : Amdahl's law with communication overhead

2.2.3.2 : Gustafson's law

2.2.3.3 : Amdahl's law and hybrid programming

2.2.4 : Critical path and Brent's theorem

2.2.5 : Scalability

2.2.5.1 : Iso-efficiency

2.2.5.2 : Precisely what do you mean by scalable?

2.2.6 : Simulation scaling

2.2.7 : Other scaling measures

2.2.8 : Concurrency; asynchronous and distributed computing

2.3 : Parallel Computers Architectures

2.3.1 : SIMD

2.3.1.1 : Pipelining and pipeline processors

2.3.1.2 : True SIMD in CPUs and GPUs

2.3.2 : MIMD / SPMD computers

2.3.3 : The commoditization of supercomputers

2.4 : Different types of memory access

2.4.1 : Symmetric Multi-Processors: Uniform Memory Access

2.4.2 : Non-Uniform Memory Access

2.4.2.1 : Affinity

2.4.2.2 : Coherence

2.4.3 : Logically and physically distributed memory

2.5 : Granularity of parallelism

2.5.1 : Data parallelism

2.5.2 : Instruction-level parallelism

2.5.3 : Task-level parallelism

2.5.4 : Conveniently parallel computing

2.5.5 : Medium-grain data parallelism

2.5.6 : Task granularity

2.6 : Parallel programming

2.6.1 : Thread parallelism

2.6.1.1 : The fork-join mechanism

2.6.1.2 : Hardware support for threads

2.6.1.3 : Threads example

2.6.1.4 : Contexts

2.6.1.5 : Race conditions, thread safety, and atomic operations

2.6.1.6 : Memory models and sequential consistency

2.6.1.7 : Affinity

2.6.1.8 : Cilk Plus

2.6.1.9 : Hyperthreading versus multi-threading

2.6.2 : OpenMP

2.6.2.1 : OpenMP examples

2.6.3 : Distributed memory programming through message passing

2.6.3.1 : The global versus the local view in distributed programming

2.6.3.2 : Blocking and non-blocking communication

2.6.3.3 : The MPI library

2.6.3.4 : Blocking

2.6.3.5 : Collective operations

2.6.3.6 : Non-blocking communication

2.6.3.7 : MPI version 1 and 2 and 3

2.6.3.8 : One-sided communication

2.6.4 : Hybrid shared/distributed memory computing

2.6.5 : Parallel languages

2.6.5.1 : Discussion

2.6.5.2 : Unified Parallel C

2.6.5.3 : High Performance Fortran

2.6.5.4 : Co-array Fortran

2.6.5.5 : Chapel

2.6.5.6 : Fortress

2.6.5.7 : X10

2.6.5.8 : Linda

2.6.5.9 : The Global Arrays library

2.6.6 : OS-based approaches

2.6.7 : Active messages

2.6.8 : Bulk synchronous parallelism

2.6.9 : Data dependencies

2.6.9.1 : Types of data dependencies

2.6.9.2 : Parallelizing nested loops

2.6.10 : Program design for parallelism

2.6.10.1 : Parallel data structures

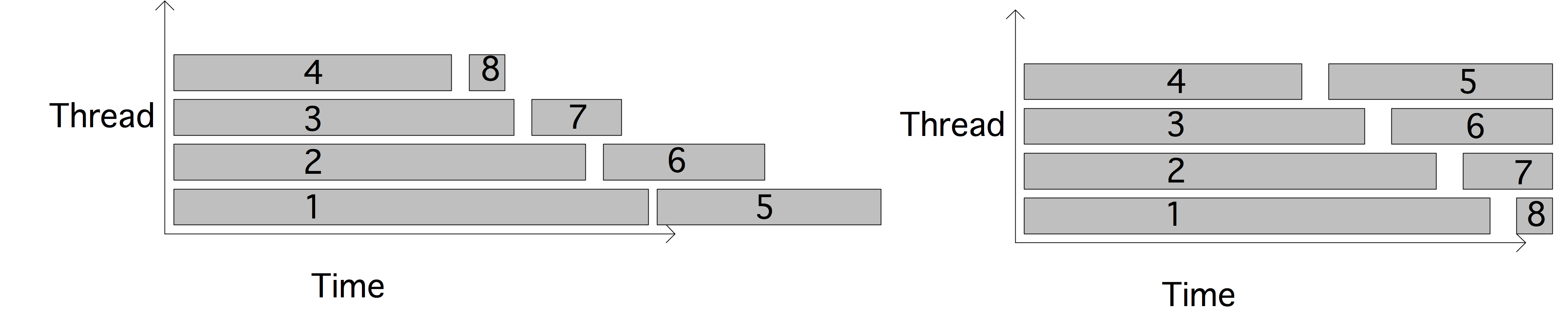

2.6.10.2 : Latency hiding

2.7 : Topologies

2.7.1 : Some graph theory

2.7.2 : Busses

2.7.3 : Linear arrays and rings

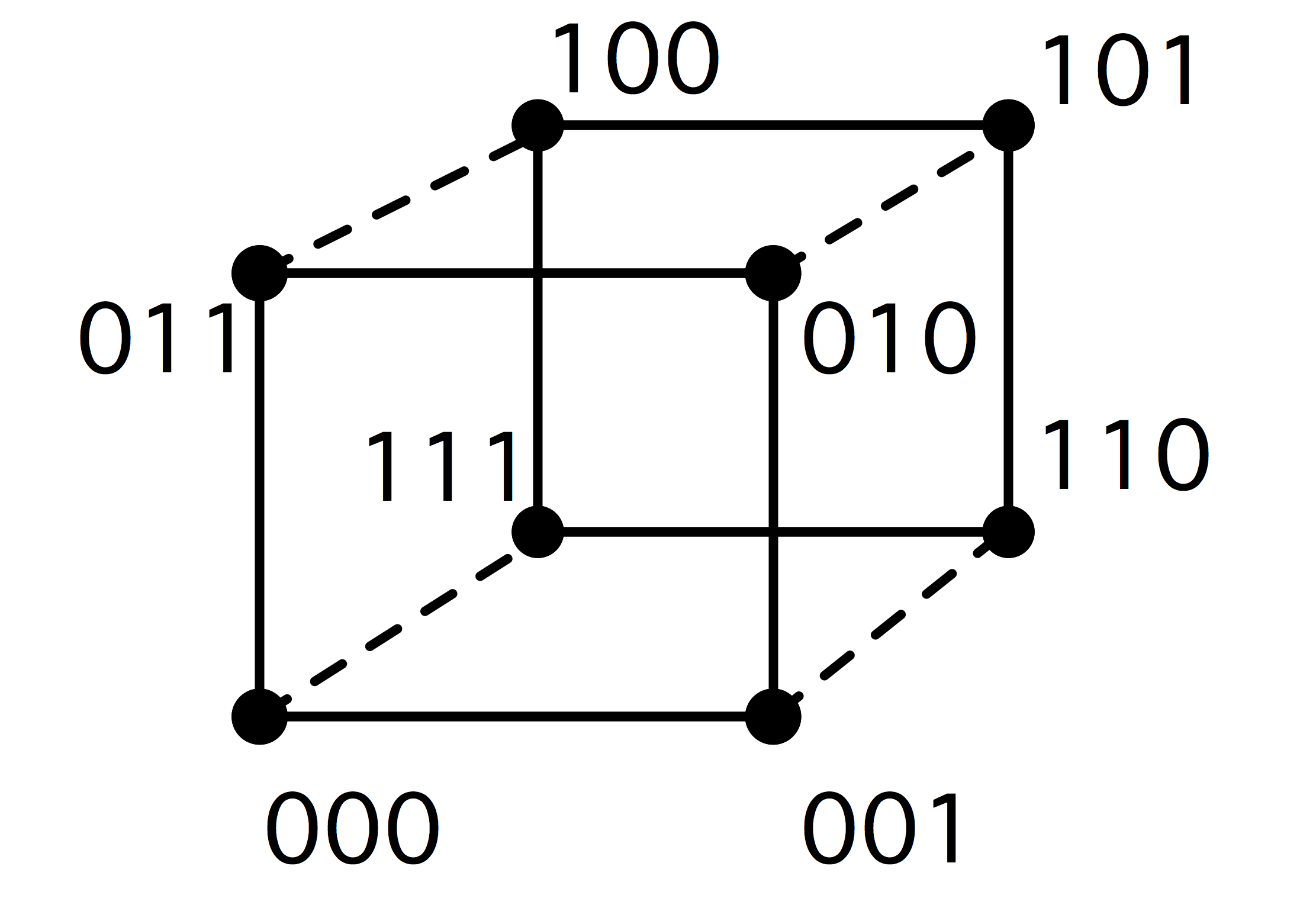

2.7.4 : 2D and 3D arrays

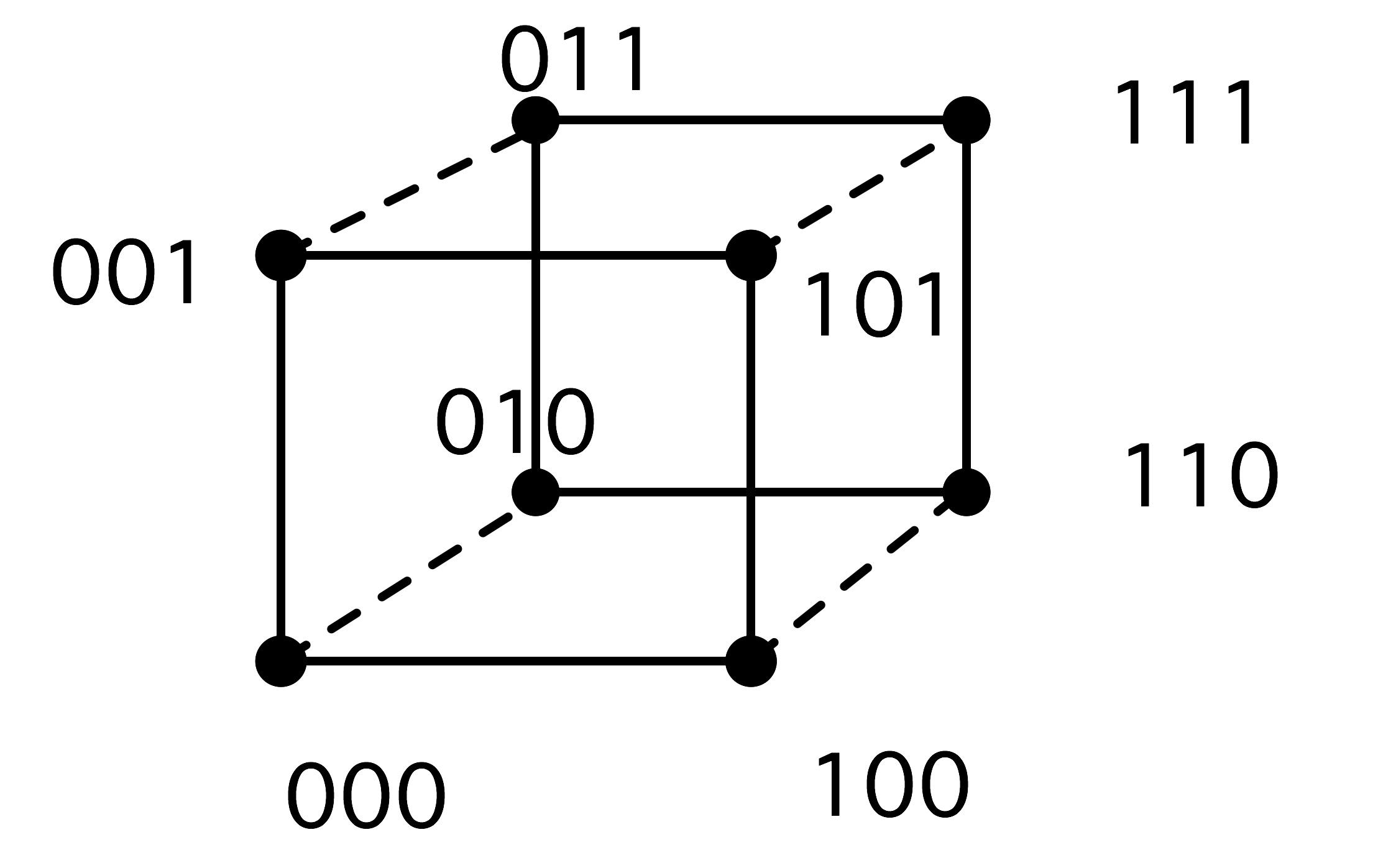

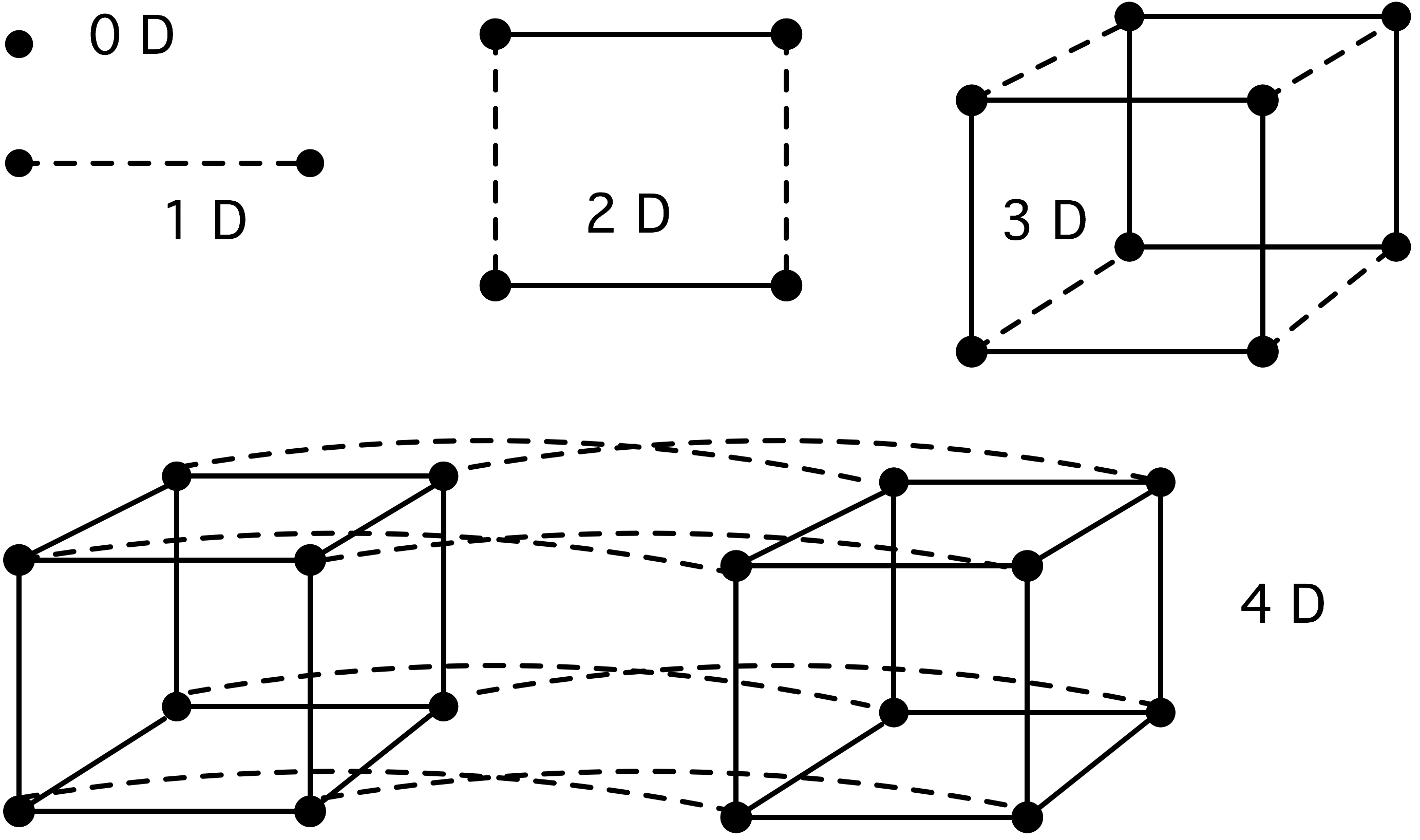

2.7.5 : Hypercubes

2.7.5.1 : Embedding grids in a hypercube

2.7.6 : Switched networks

2.7.6.1 : Cross bar

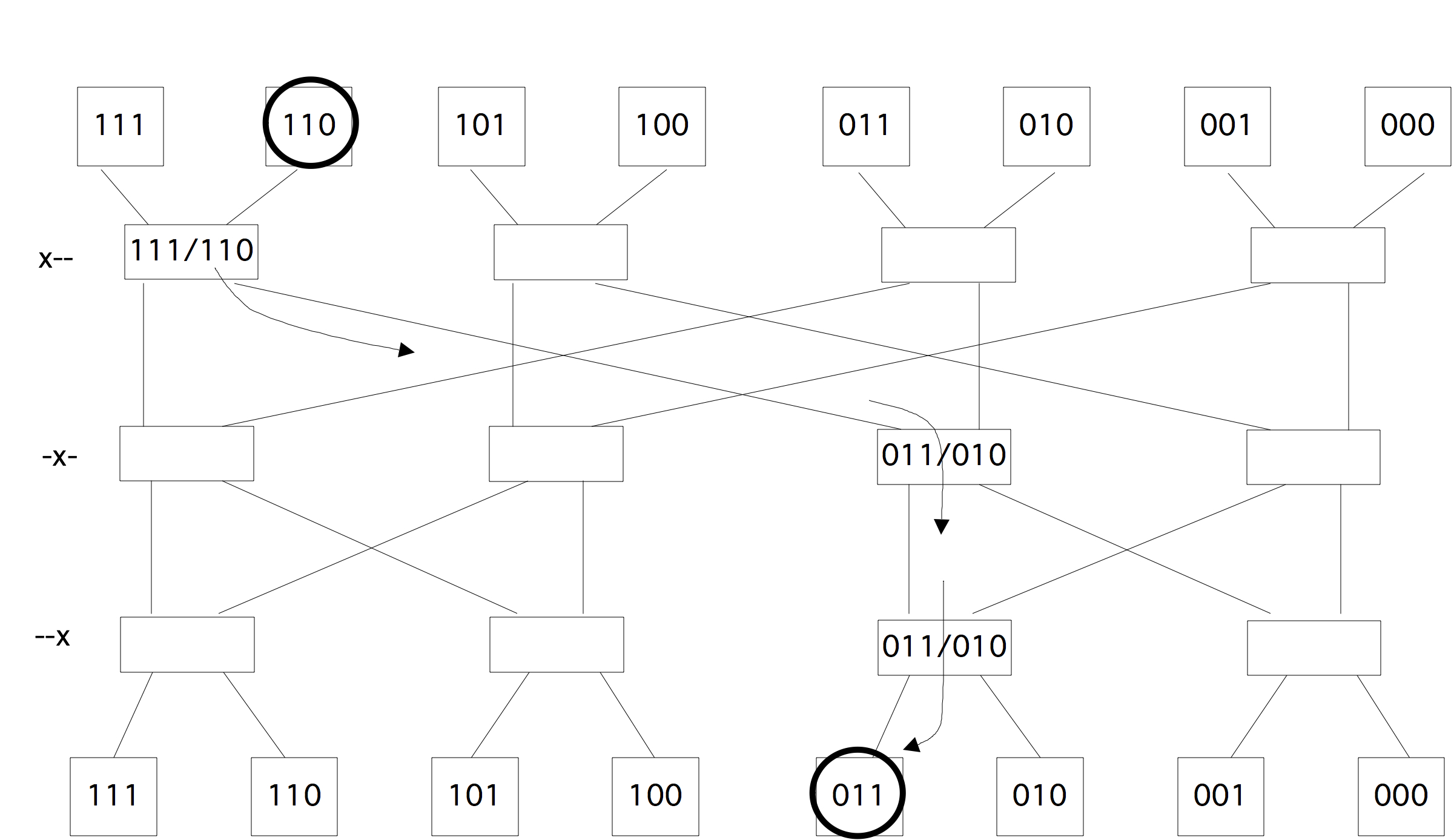

2.7.6.2 : Butterfly exchange

2.7.6.3 : Fat-trees

2.7.6.4 : Over-subscription and contention

2.7.7 : Cluster networks

2.7.7.1 : Case study: Stampede

2.7.7.2 : Case study: Cray Dragonfly networks

2.7.8 : Bandwidth and latency

2.7.9 : Locality in parallel computing

2.8 : Multi-threaded architectures

2.9 : Co-processors, including GPUs

2.9.1 : A little history

2.9.2 : Bottlenecks

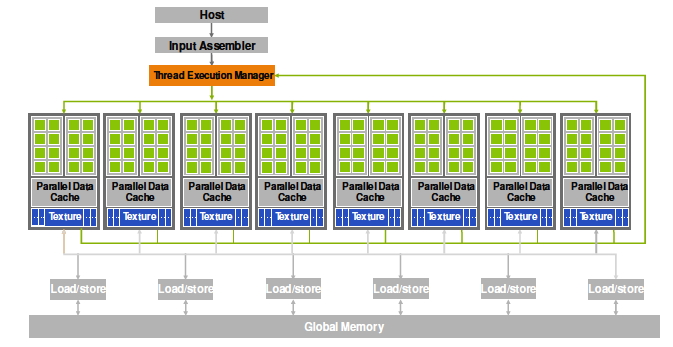

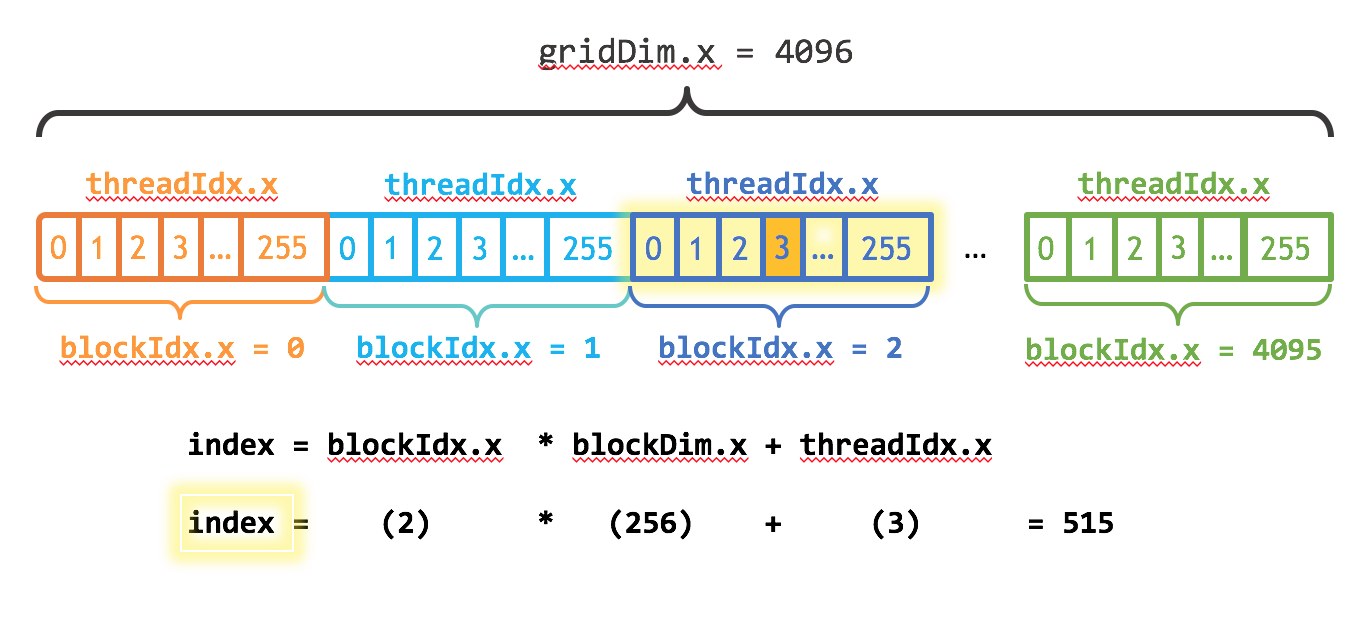

2.9.3 : GPU computing

2.9.3.1 : SIMD-type programming with kernels

2.9.3.2 : GPUs versus CPUs

2.9.3.3 : Expected benefit from GPUs

2.9.4 : Intel Xeon Phi

2.10 : Load balancing

2.10.1 : Load balancing versus data distribution

2.10.2 : Load scheduling

2.10.3 : Load balancing of independent tasks

2.10.4 : Load balancing as graph problem

2.10.5 : Load redistributing

2.10.5.1 : Diffusion load balancing

2.10.5.2 : Load balancing with space-filling curves

2.11 : Remaining topics

2.11.1 : Distributed computing, grid computing, cloud computing

2.11.2 : Usage scenarios

2.11.3 : Characterization

2.11.4 : Capability versus capacity computing

2.11.5 : MapReduce

2.11.5.1 : Expressive power of the MapReduce model

2.11.5.2 : Mapreduce software

2.11.5.3 : Implementation issues

2.11.5.4 : Functional programming

2.11.6 : The top500 list

2.11.6.1 : The top500 list as a recent history of supercomputing

2.11.7 : Heterogeneous computing


2 Parallel Computing

The largest and most powerful computers are sometimes called `supercomputers'. For the last two decades, this has, without exception, referred to parallel computers: machines with more than one CPU that can be set to work on the same problem.

Parallelism is hard to define precisely, since it can appear on several levels. In the previous chapter you already saw how inside a CPU several instructions can be `in flight' simultaneously. This is called instruction-level parallelism , and it is outside explicit user control: it derives from the compiler and the CPU deciding which instructions, out of a single instruction stream, can be processed simultaneously. At the other extreme is the sort of parallelism where more than one instruction stream is handled by multiple processors, often each on their own circuit board. This type of parallelism is typically explicitly scheduled by the user.

In this chapter, we will analyze this more explicit type of parallelism, the hardware that supports it, the programming that enables it, and the concepts that analyze it.

2.1 Introduction


In scientific codes, there is often a large amount of work to be done, and it is often regular to some extent, with the same operation being performed on many data. The question is then whether this work can be sped up by use of a parallel computer. If there are $n$ operations to be done, and they would take time $t$ on a single processor, can they be done in time $t/p$ on $p$ processors?

Let us start with a very simple example. Adding two vectors of length $n$

for (i=0; i<n; i++) a[i] = b[i] + c[i];can be done with up to $n$ processors. In the idealized case with $n$ processors, each processor has local scalars a,b,c and executes

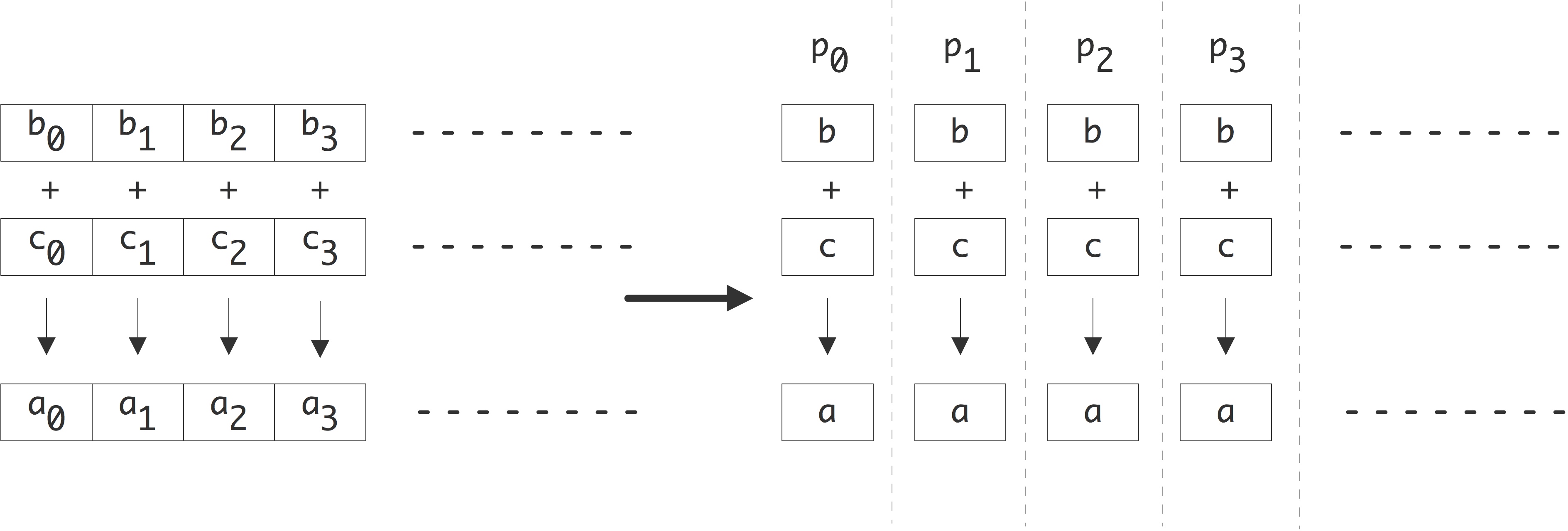

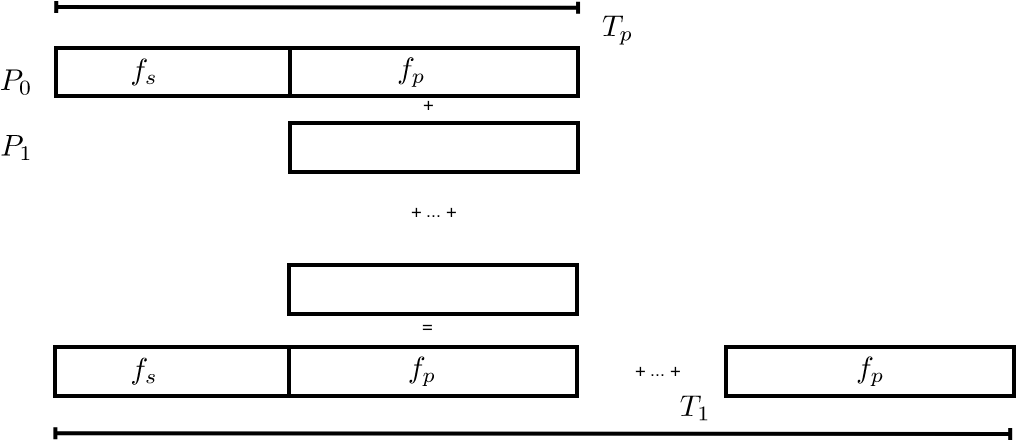

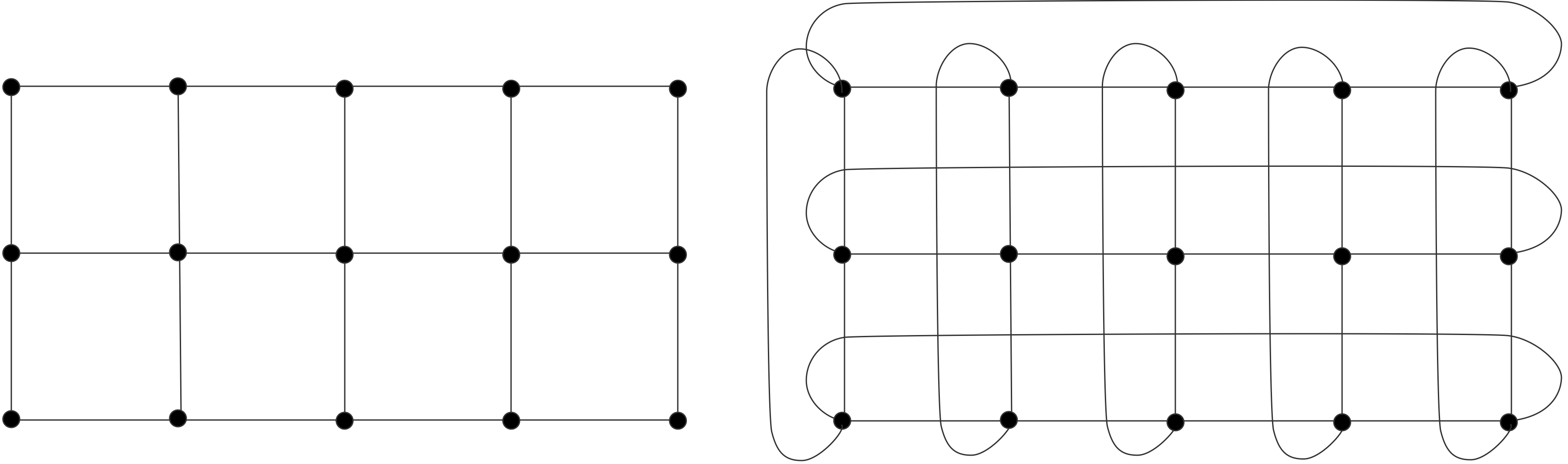

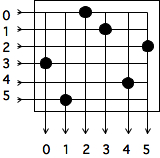

FIGURE 2.1: Parallelization of a vector addition.

the single instruction a=b+c . This is depicted in figure 2.1 .

In the general case, where each processor executes something like

    for (i=my_low; i<my_high; i++)
      a[i] = b[i] + c[i];

execution time is linearly reduced with the number of processors. If each operation takes a unit time, the original algorithm takes time $n$, and the parallel execution on $p$ processors takes time $n/p$. The parallel algorithm is faster by a factor of $p$. (Note: here we ignore lower order errors in this result when $p$ does not divide $n$ evenly. We will also, in general, ignore matters of loop overhead.)
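The text does not say how `my_low` and `my_high` are chosen. One common choice, sketched here as an assumption rather than as the book's method, gives the first `n % p` processors one extra element. The function names `block_range` and `local_vector_add` are hypothetical.

```c
#include <assert.h>

/* Compute the half-open index range [*low, *high) owned by processor
   `rank` when n elements are divided over p processors.
   Assumption: the first n % p processors each get one extra element. */
void block_range(int n, int p, int rank, int *low, int *high) {
  assert(p > 0 && rank >= 0 && rank < p && n >= 0);
  int base = n / p;   /* minimum number of elements per processor */
  int extra = n % p;  /* this many processors get base+1 elements */
  *low = rank * base + (rank < extra ? rank : extra);
  *high = *low + base + (rank < extra ? 1 : 0);
}

/* The loop from the text, executed by one processor on its own range. */
void local_vector_add(double *a, const double *b, const double *c,
                      int my_low, int my_high) {
  for (int i = my_low; i < my_high; i++)
    a[i] = b[i] + c[i];
}
```

With $n=10$ and $p=4$ the ranges hold 3, 3, 2, and 2 elements, so the busiest processor performs $\lceil n/p\rceil$ operations. This is the lower order effect that the note above ignores.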

Next, let us consider summing the elements of a vector. (An operation that has a vector as input but only a scalar as output is often called a reduction .) We again assume that each processor contains just a single array element. The sequential code:

s = 0; for (i=0; i<n; i++) s += x[i]is no longer obviously parallel, but if we recode the loop as

for (s=2; s<2*n; s*=2)

for (i=0; i<n-s/2; i+=s)

x[i] += x[i+s/2]

there is a way to parallelize it: every iteration of the outer loop is

now a loop that can be done by $n/s$ processors in parallel. Since the

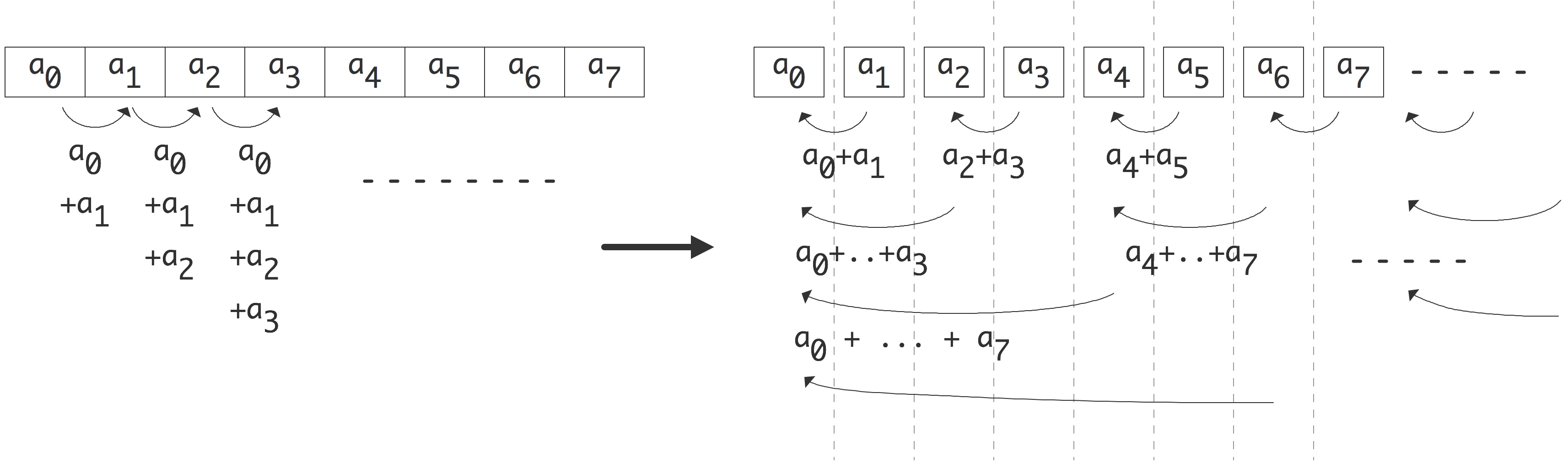

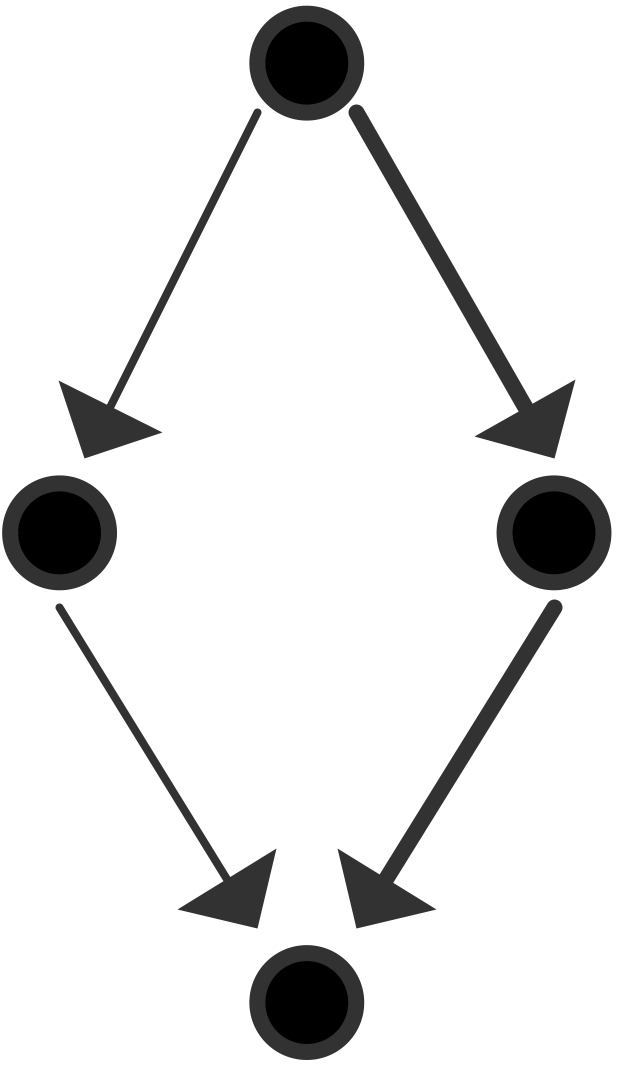

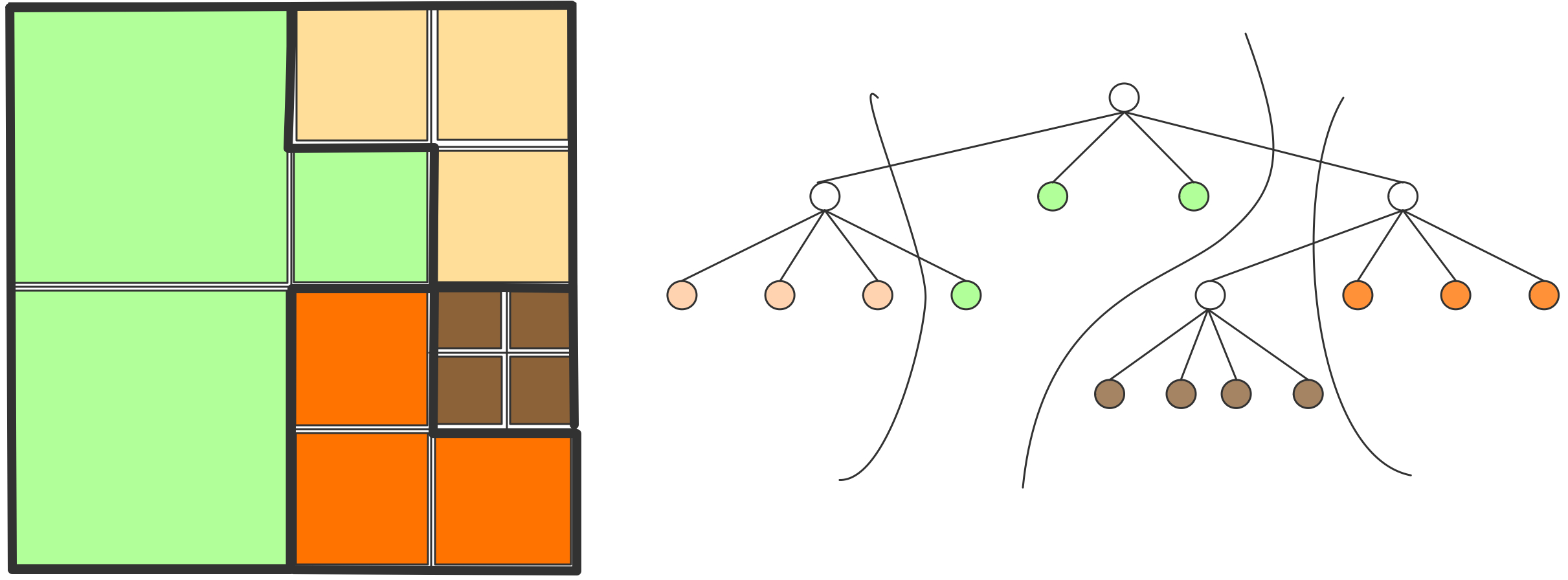

FIGURE 2.2: Parallelization of a vector reduction.

outer loop will go through $\log_2n$ iterations, we see that the new algorithm has a reduced runtime of $n/p\cdot\log_2 n$. The parallel algorithm is now faster by a factor of $p/\log_2n$. This is depicted in figure 2.2 .
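The recoded loop can be checked sequentially before any parallelization. The sketch below wraps it in a hypothetical function `tree_sum`; the function name and the step counter are additions for illustration and do not appear in the text.

```c
#include <assert.h>

/* Sum x[0..n-1] in place using the recoded loop from the text.
   The result ends up in x[0]; the return value is the number of
   outer iterations, each of which could run as one parallel step. */
int tree_sum(double *x, int n) {
  assert(n >= 1);
  int steps = 0;
  for (int s = 2; s < 2 * n; s *= 2) {
    /* Each inner iteration writes x[i] and reads x[i+s/2] for a
       different multiple i of s, so the iterations are independent. */
    for (int i = 0; i < n - s / 2; i += s)
      x[i] += x[i + s / 2];
    steps++;
  }
  return steps;
}
```

For $n=8$ the function performs 3 outer iterations, matching $\log_2 8$, and leaves the total in `x[0]`.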

Even from these two simple examples we can see some of the characteristics of parallel computing:

- Sometimes algorithms need to be rewritten slightly to make them parallel.

- A parallel algorithm may not show perfect speedup.

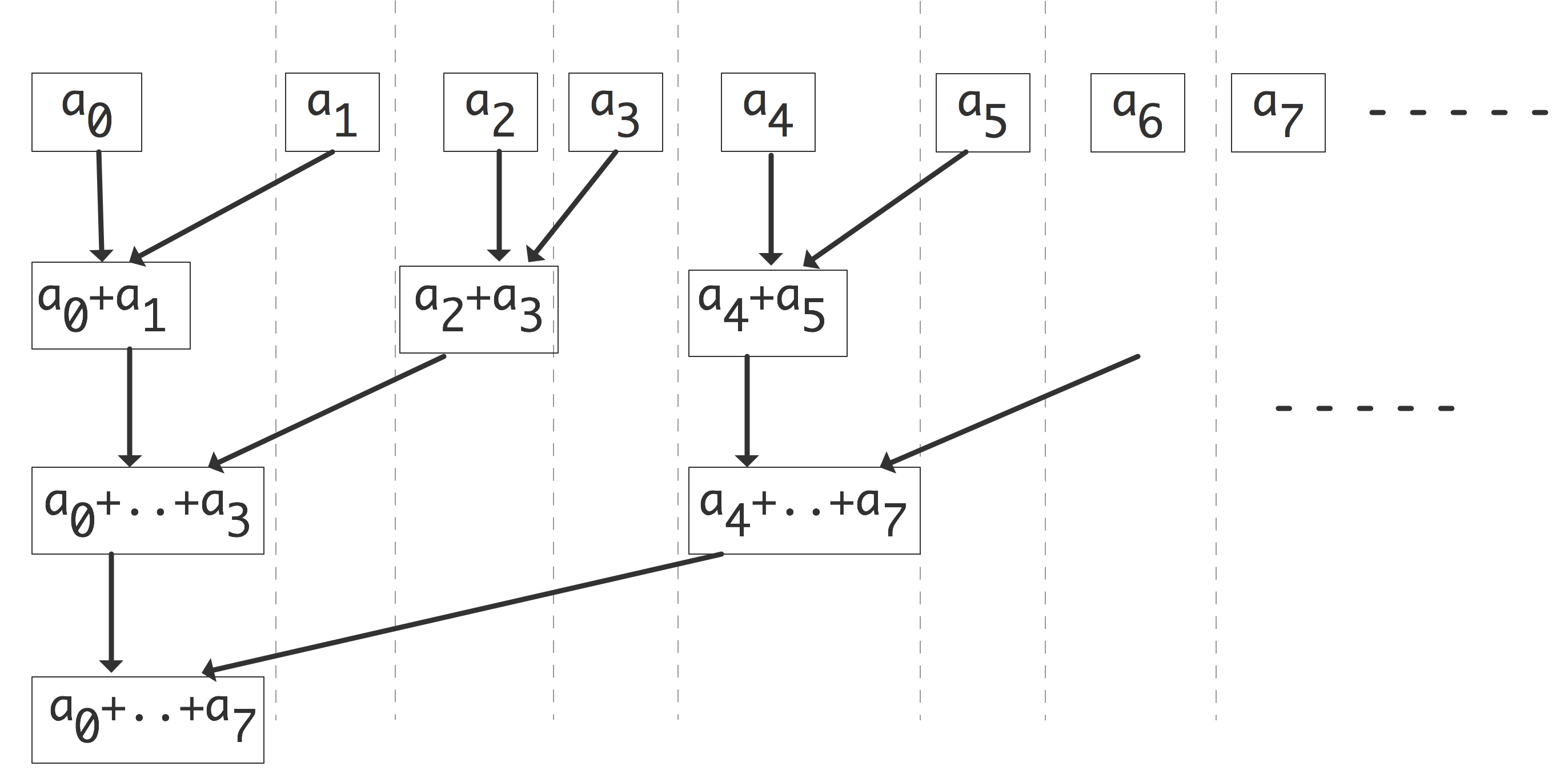

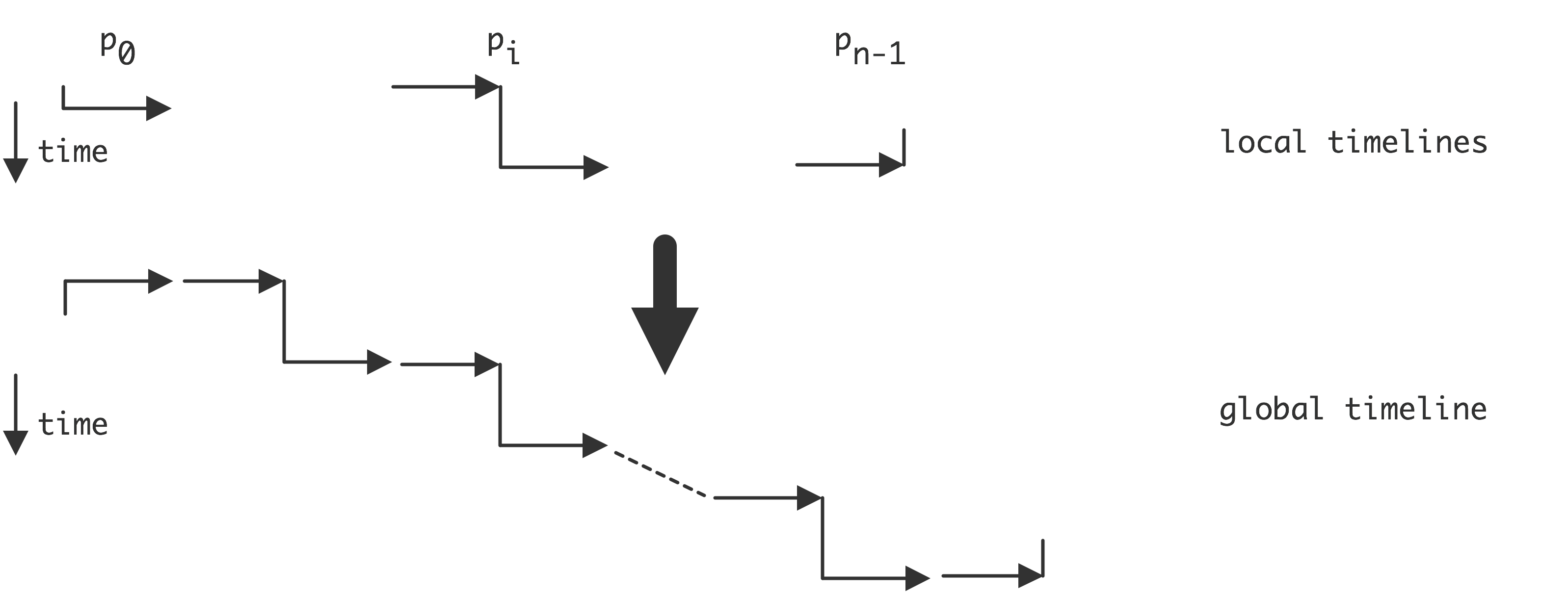

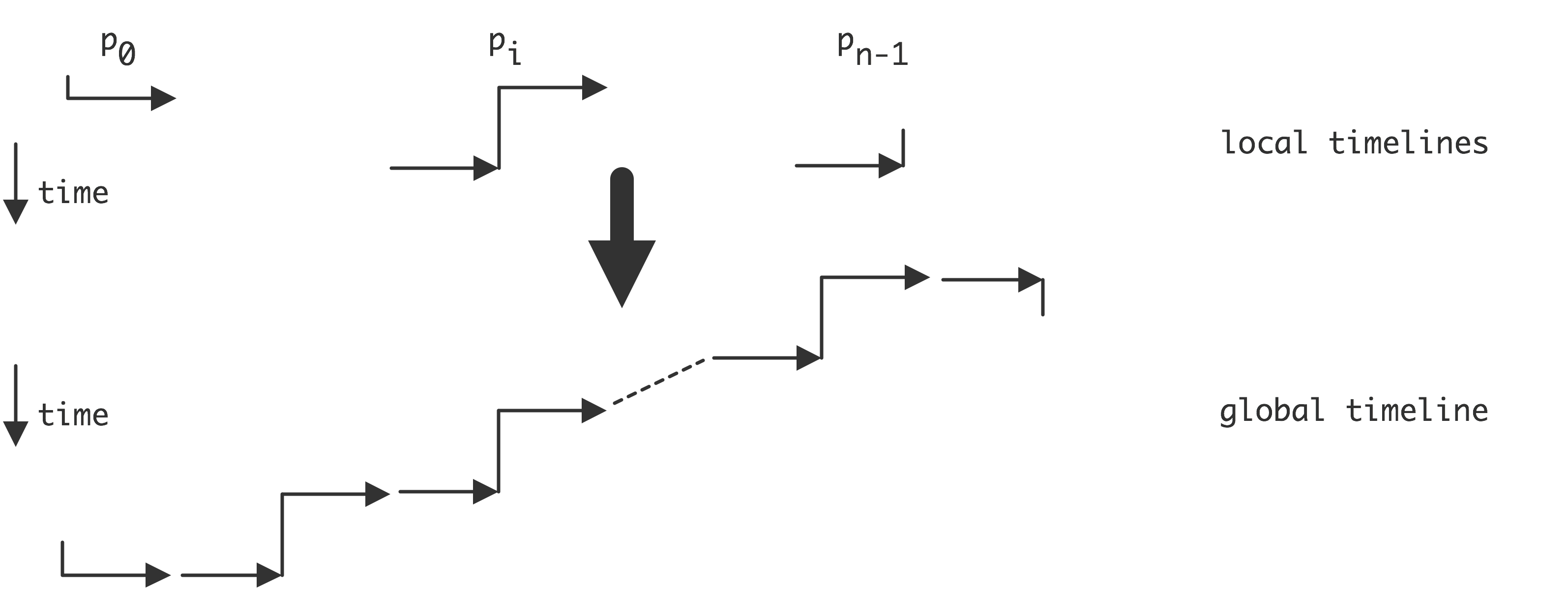

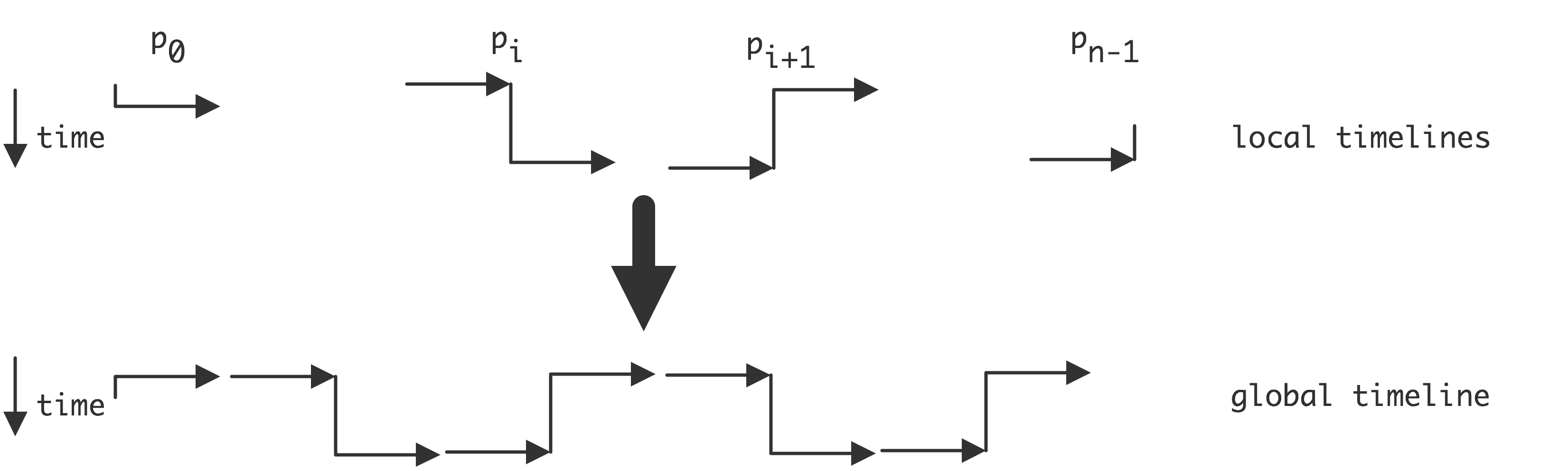

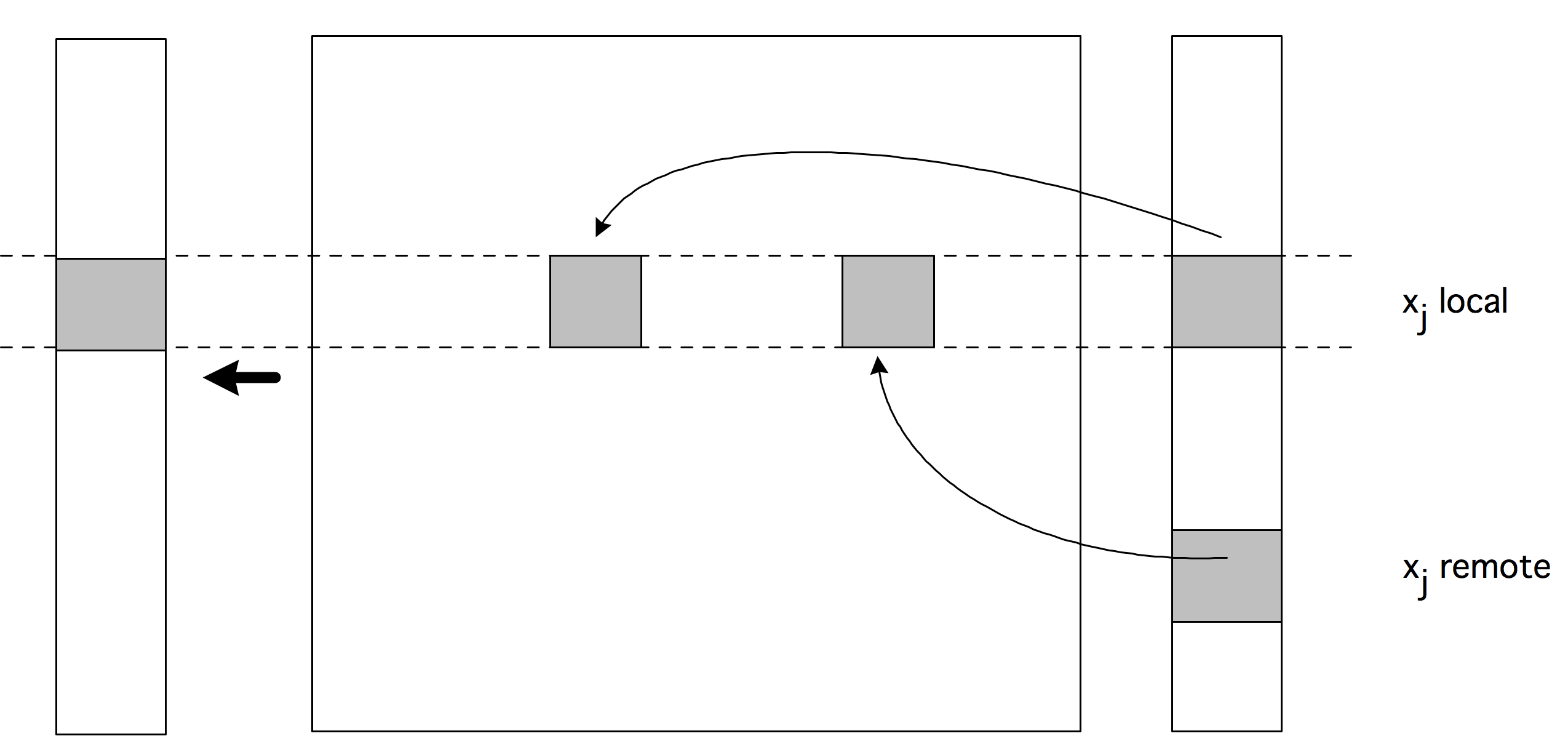

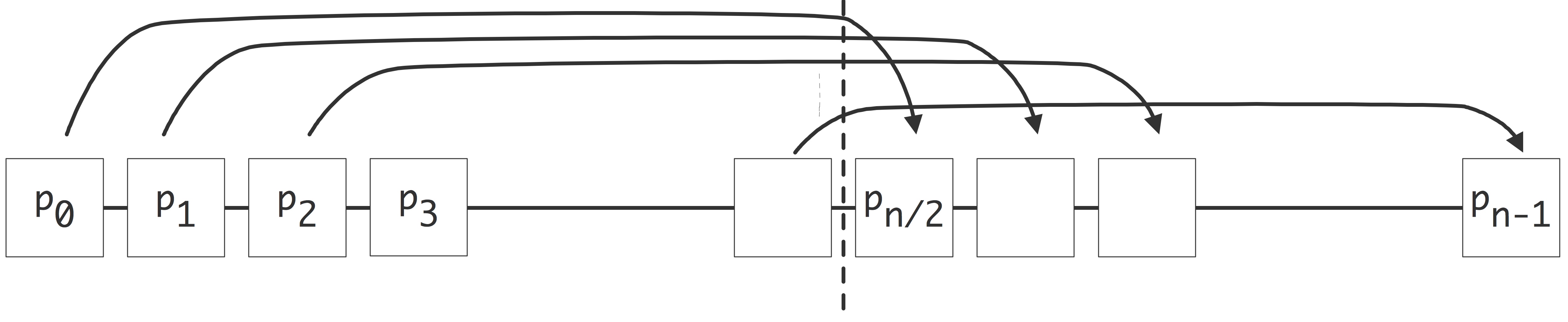

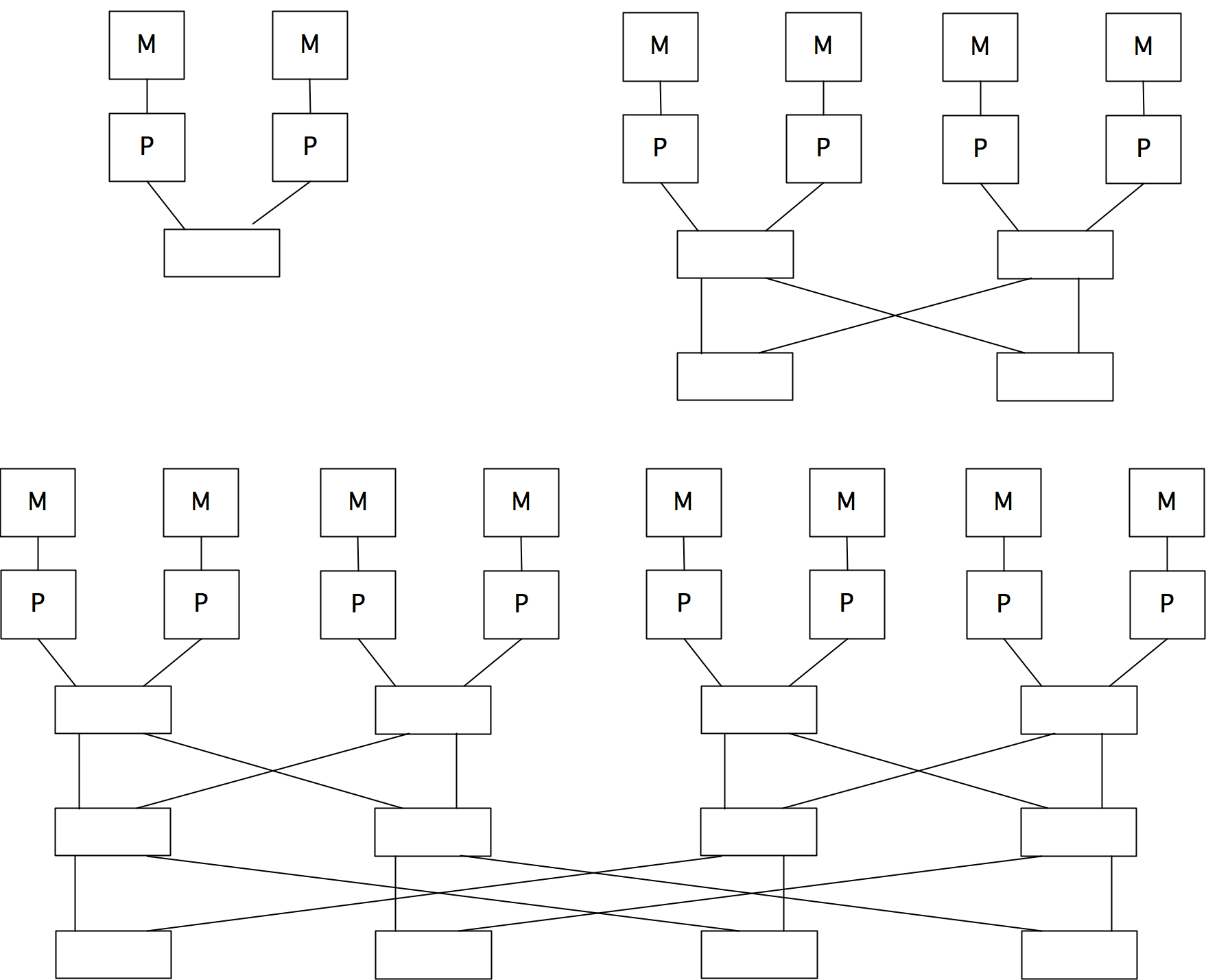

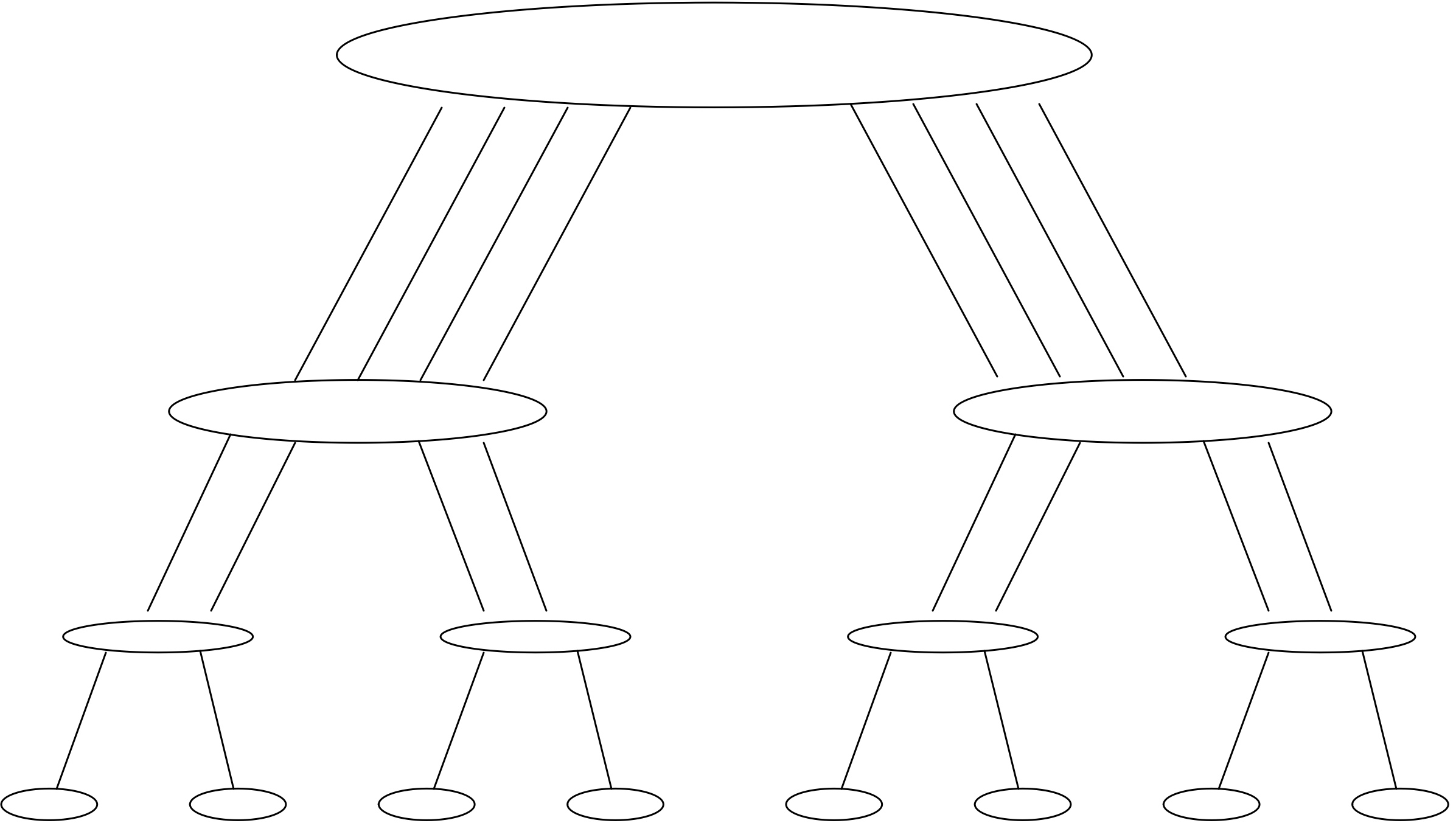

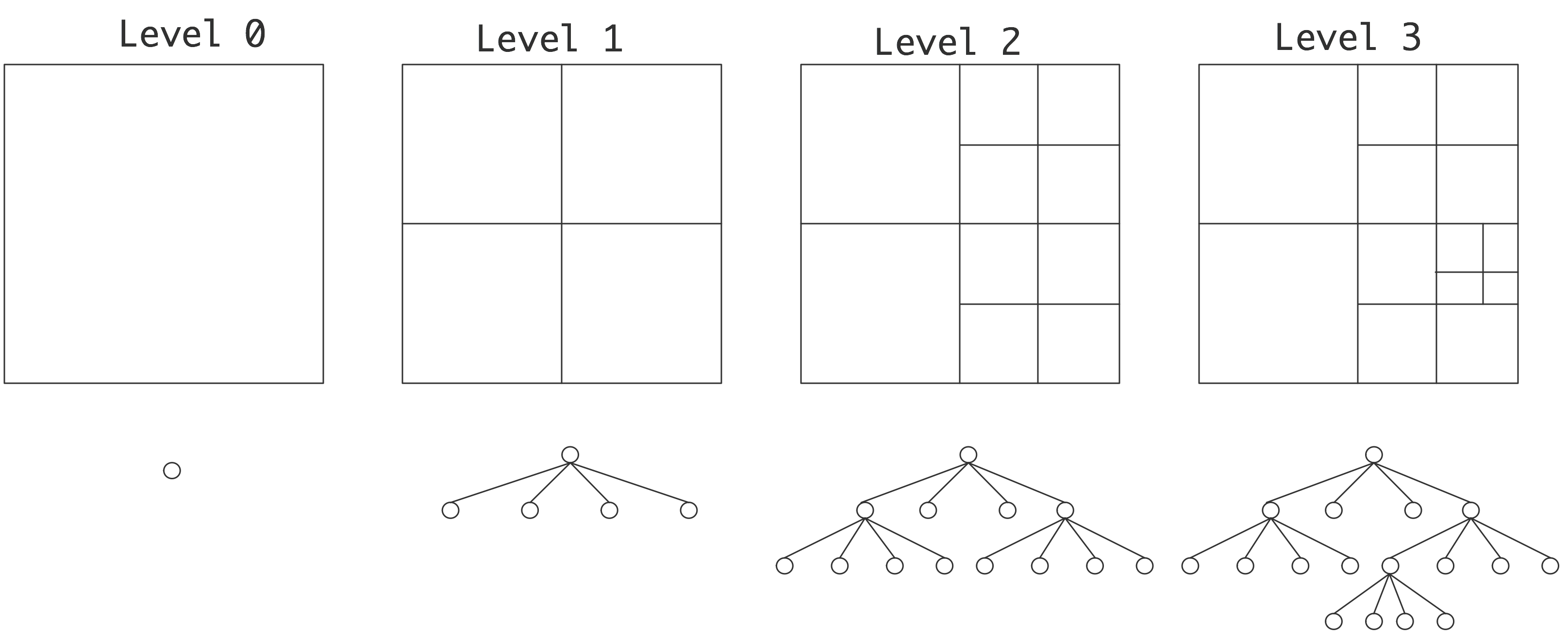

First let us look systematically at communication. We can take the parallel algorithm in the right half of figure 2.2 and turn it into a tree graph (see Appendix app:graph) by defining the inputs as leaf nodes, all partial sums as interior nodes, and the root as the total sum. There is an edge from one node to another if the first is input to the (partial) sum in the other. This is illustrated in figure 2.3. In this figure nodes are horizontally aligned with other computations that can be performed simultaneously; each level is sometimes called a superstep in the computation. Nodes are vertically aligned if they are computed on the same processor, and an arrow corresponds to a communication if it goes from one processor to another.

FIGURE 2.3: Communication structure of a parallel vector reduction.

The vertical alignment in figure 2.3 is not the only one possible. If nodes are shuffled within a superstep or horizontal level, a different communication pattern arises.

Exercise

Consider placing the nodes within a superstep on random processors. Show that, if no two nodes wind up on the same processor, at most twice the number of communications is performed from the case in figure 2.3.

End of exercise

Exercise

Can you draw the graph of a computation that leaves the sum result on each processor? There is a solution that takes twice the number of supersteps, and there is one that takes the same number. In both cases the graph is no longer a tree, but a more general DAG.

End of exercise

Processors are often connected through a network, and moving data through this network takes time. This introduces a concept of distance between the processors. In figure 2.3 , where the processors are linearly ordered, this is related to their rank in the ordering. If the network only connects a processor with its immediate neighbors, each iteration of the outer loop increases the distance over which communication takes place.

Exercise Assume that an addition takes a certain unit time, and that moving a number from one processor to another takes that same unit time. Show that the communication time equals the computation time.

Now assume that sending a number from processor $p$ to $p\pm k$ takes time $k$. Show that the execution time of the parallel algorithm now is of the same order as the sequential time.

End of exercise

The summing example made the unrealistic assumption that every processor initially stored just one vector element: in practice we will have $p<n$, and each processor stores a number of vector elements.

Exercise

Consider the case of summing 8 elements with 4 processors. Show that some of the edges in the graph of figure 2.3 no longer correspond to actual communications.

Now consider summing 16 elements with, again, 4 processors. What is the number of communication edges this time?

End of exercise

These matters of algorithm adaptation, efficiency, and communication are crucial to all of parallel computing. We will return to these issues in various guises throughout this chapter.

2.1.1 Functional parallelism versus data parallelism

From the above introduction we can describe parallelism as finding independent operations in the execution of a program. In all of the examples these independent operations were in fact identical operations, but applied to different data items. We could call this data parallelism: the same operation is applied in parallel to many data elements. This is in fact a common scenario in scientific computing: parallelism often stems from the fact that a data set (vector, matrix, graph, ...) is spread over many processors, each working on its part of the data.

The term data parallelism is traditionally mostly applied if the operation is a single instruction; in the case of a subprogram it is often called task parallelism.

It is also possible to find independence, not based on data elements, but based on the instructions themselves. Traditionally, compilers analyze code in terms of ILP: independent instructions can be given to separate floating point units, or reordered, for instance to optimize register usage (see also section 2.5.2). ILP is one case of functional parallelism; on a higher level, functional parallelism can be obtained by considering independent subprograms, often called task parallelism; see section 2.5.3.

Some examples of functional parallelism are Monte Carlo simulations, and other algorithms that traverse a parametrized search space, such as boolean satisfiability problems.

2.1.2 Parallelism in the algorithm versus in the code

Often we are in the situation that we want to parallelize an algorithm that has a common expression in sequential form. In some cases, this sequential form is straightforward to parallelize, such as in the vector addition discussed above. In other cases there is no simple way to parallelize the algorithm; we will discuss linear recurrences in section 6.9.2. And in yet another case the sequential code may not look parallel, but the algorithm actually has parallelism.

Exercise

    for i in [1:N]:
      x[0,i] = some_function_of(i)
      x[i,0] = some_function_of(i)
    for i in [1:N]:
      for j in [1:N]:
        x[i,j] = x[i-1,j]+x[i,j-1]

Answer the following questions about the double i,j loop:

- Are the iterations of the inner loop independent, that is, could they be executed simultaneously?

- Are the iterations of the outer loop independent?

- If x[1,1] is known, show that x[2,1] and x[1,2] can be computed independently.

- Does this give you an idea for a parallelization strategy?

End of exercise

We will discuss the solution to this conundrum in section 6.9.1 . In general, the whole of chapter High performance linear algebra will be about the amount of parallelism intrinsic in scientific computing algorithms.

2.2 Theoretical concepts


There are two important reasons for using a parallel computer: to have access to more memory or to obtain higher performance. It is easy to characterize the gain in memory, as the total memory is the sum of the individual memories. The speed of a parallel computer is harder to characterize. This section will have an extended discussion on theoretical measures for expressing and judging the gain in execution speed from going to a parallel architecture.

2.2.1 Definitions


2.2.1.1 Speedup and efficiency


A simple approach to defining speedup is to let the same program run on a single processor, and on a parallel machine with $p$ processors, and to compare runtimes. With $T_1$ the execution time on a single processor and $T_p$ the time on $p$ processors, we define the speedup as $S_p=T_1/T_p$. (Sometimes $T_1$ is defined as `the best time to solve the problem on a single processor', which allows for using a different algorithm on a single processor than in parallel.) In the ideal case, $T_p=T_1/p$, but in practice we don't expect to attain that, so $S_p\leq p$. To measure how far we are from the ideal speedup, we introduce the efficiency $E_p=S_p/p$. Clearly, $0< E_p\leq 1$.

There is a practical problem with the above definitions: a problem that can be solved on a parallel machine may be too large to fit on any single processor. Conversely, distributing a single processor problem over many processors may give a distorted picture since very little data will wind up on each processor. Below we will discuss more realistic measures of speed-up.

There are various reasons why the actual speedup is less than $p$. For one, using more than one processor necessitates communication and synchronization, which is overhead that was not part of the original computation. Secondly, if the processors do not have exactly the same amount of work to do, they may be idle part of the time (this is known as load unbalance), again lowering the actually attained speedup. Finally, code may have sections that are inherently sequential.

Communication between processors is an important source of a loss of efficiency. Clearly, a problem that can be solved without communication will be very efficient. Such a problem, in effect consisting of a number of completely independent calculations, is called embarrassingly parallel (or conveniently parallel; see section 2.5.4); it will have close to a perfect speedup and efficiency.

Exercise

The case of speedup larger than the number of processors is called superlinear speedup. Give a theoretical argument why this can never happen.

End of exercise

In practice, superlinear speedup can happen. For instance, suppose a problem is too large to fit in memory, and a single processor can only solve it by swapping data to disc. If the same problem fits in the memory of two processors, the speedup may well be larger than $2$ since disc swapping no longer occurs. Having less, or more localized, data may also improve the cache behavior of a code.

A form of superlinear speedup can also happen in search algorithms. Imagine that each processor starts in a different location of the search space; now it can happen that, say, processor 3 immediately finds the solution. Sequentially, all the possibilities for processors 1 and 2 would have had to be traversed. The speedup is much greater than 3 in this case.

2.2.1.2 Cost-optimality


In cases where the speedup is not perfect we can define overhead as the difference \begin{equation} T_o = pT_p-T_1. \end{equation} We can also interpret this as the difference between simulating the parallel algorithm on a single processor, and the actual best sequential algorithm.

We will later see two different types of overhead:

- The parallel algorithm can be essentially different from the sequential one. For instance, sorting algorithms have a complexity $O(n\log n)$, but the parallel bitonic sort (section 8.6 ) has complexity $O(n\log^2n)$.

- The parallel algorithm can have overhead derived from the process of parallelizing, such as the cost of sending messages. As an example, section 6.2.3 analyzes the communication overhead in the matrix-vector product.

A parallel algorithm is called cost-optimal if the overhead is at most of the order of the running time of the sequential algorithm.

Exercise

The definition of overhead above implicitly assumes that overhead is not parallelizable. Discuss this assumption in the context of the two examples above.

End of exercise

2.2.2 Asymptotics


If we ignore limitations such as that the number of processors has to be finite, or the physicalities of the interconnect between them, we can derive theoretical results on the limits of parallel computing. This section will give a brief introduction to such results, and discuss their connection to real life high performance computing.

Consider for instance the matrix-matrix multiplication $C=AB$, which takes $2N^3$ operations where $N$ is the matrix size. Since there are no dependencies between the operations for the elements of $C$, we can perform them all in parallel. If we had $N^2$ processors, we could assign each to an $(i,j)$ coordinate in $C$, and have it compute $c_{ij}$ in $2N$ time. Thus, this parallel operation has efficiency $1$, which is optimal.

Exercise Show that this algorithm ignores some serious issues about memory usage:

- If the matrix is kept in shared memory, how many simultaneous reads from each memory location are performed?

- If the processors keep the input and output to the local computations in local storage, how much duplication is there of the matrix elements?

End of exercise

Adding $n$ numbers $\{x_i\}_{i=0\ldots n-1}$ can be performed in $\log_2 n$ time with $n/2$ processors. If we have $n/2$ processors we could compute:

- Define $s^{(0)}_i = x_i$.

- Iterate with $j=1,\ldots,\log_2 n$:

  - Compute $n/2^j$ partial sums $s^{(j)}_i=s^{(j-1)}_{2i}+s^{(j-1)}_{2i+1}$ for $i=0,\ldots,n/2^j-1$.

The total sum is then $s^{(\log_2 n)}_0$.

Exercise

Show that, with the scheme for parallel addition just outlined, you can multiply two matrices in $\log_2 N$ time with $N^3/2$ processors. What is the resulting efficiency?

End of exercise

It is now a legitimate theoretical question to ask

- If we had infinitely many processors, what is the lowest possible time complexity for matrix-matrix multiplication, or

- Are there faster algorithms that still have $O(1)$ efficiency?

A first objection to these kinds of theoretical bounds is that they implicitly assume some form of shared memory. In fact, the formal model for these algorithms is called a PRAM , where the assumption is that every memory location is accessible to any processor.

Often an additional assumption is made that multiple simultaneous accesses to the same location are in fact possible. Since read and write accesses have a different behavior in practice, there is the concept of CREW-PRAM, for Concurrent Read, Exclusive Write PRAM.

The basic assumptions of the PRAM model are unrealistic in practice, especially in the context of scaling up the problem size and the number of processors. A further objection to the PRAM model is that even on a single processor it ignores the memory hierarchy (section 1.3).

But even if we take distributed memory into account, theoretical results can still be unrealistic. The above summation algorithm can indeed work unchanged in distributed memory, except that we have to worry about the distance between active processors increasing as we iterate further. If the processors are connected by a linear array, the number of `hops' between active processors doubles in every iteration, and with that, asymptotically, the computation time of the iteration. The total execution time then becomes of order $n$, since the hop counts $1+2+\cdots+n/2$ add up to $n-1$. This is a disappointing result given that we throw so many processors at the problem.
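The effect of the network on the summation can be made concrete with a small cost model. The sketch below assumes that $n$ is a power of two, that a message over $k$ hops takes time $k$, and that all messages within one iteration travel simultaneously; the function names are hypothetical.

```c
#include <assert.h>

/* Total communication time of the tree summation of n values on a
   linear array of n processors. In the iteration with stride s,
   communicating partners are s/2 hops apart. */
long linear_array_comm_time(long n) {
  assert(n >= 1);
  long total = 0;
  for (long s = 2; s < 2 * n; s *= 2)
    total += s / 2;  /* every message in this iteration travels s/2 hops */
  return total;
}

/* The same summation on a network where communicating partners are
   always direct neighbors: one time unit per iteration. */
long unit_hop_comm_time(long n) {
  assert(n >= 1);
  long steps = 0;
  for (long s = 2; s < 2 * n; s *= 2)
    steps++;
  return steps;
}
```

For $n=1024$ the linear array model gives a communication time of 1023, against 10 when every partner is one hop away.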

What if the processors are connected with a hypercube topology (section 2.7.5)? It is not hard to see that the summation algorithm can then indeed work in $\log_2n$ time. However, as $n\rightarrow\infty$, can we physically construct a sequence of hypercubes of $n$ nodes and keep the communication time between two connected nodes constant? Since communication time depends on latency, which partly depends on the length of the wires, we have to worry about the physical distance between nearest neighbors.

The crucial question here is whether the hypercube (a $\log_2 n$-dimensional object) can be embedded in 3-dimensional space, while keeping the distance (measured in meters) constant between connected neighbors. It is easy to see that a 3-dimensional grid can be scaled up arbitrarily while maintaining a unit wire length, but the question is not clear for a hypercube. There, the length of the wires may have to increase as $n$ grows, which runs afoul of the finite speed of electrons.

We sketch a proof (see [Fisher:fastparallel] for more details) that, in our three dimensional world and with a finite speed of light, speedup is limited to $\sqrt[4]{n}$ for a problem on $n$ processors, no matter the interconnect. The argument goes as follows. Consider an operation that involves collecting a final result on one processor. Assume that each processor takes a unit volume of space, produces one result per unit time, and can send one data item per unit time. Then, in an amount of time $t$, at most the processors in a ball with radius $t$, that is, $O(t^3)$ processors total, can contribute to the final result; all others are too far away. In time $T$, then, the number of operations that can contribute to the final result is $\int_0^T t^3dt=O(T^4)$. This means that the maximum achievable speedup is the fourth root of the sequential time.

Finally, the question `what if we had infinitely many processors' is not realistic as such, but we will allow it in the sense that we will ask the weak scaling question (section 2.2.5 ) `what if we let the problem size and the number of processors grow proportional to each other'. This question is legitimate, since it corresponds to the very practical deliberation whether buying more processors will allow one to run larger problems, and if so, with what `bang for the buck'.

2.2.3 Amdahl's law


One reason for less than perfect speedup is that parts of a code can be inherently sequential. This limits the parallel efficiency as follows. Suppose that $5\%$ of a code is sequential, then the time for that part can not be reduced, no matter how many processors are available. Thus, the speedup on that code is limited to a factor of $20$. This phenomenon is known as Amdahl's Law [amd:law], which we will now formulate.

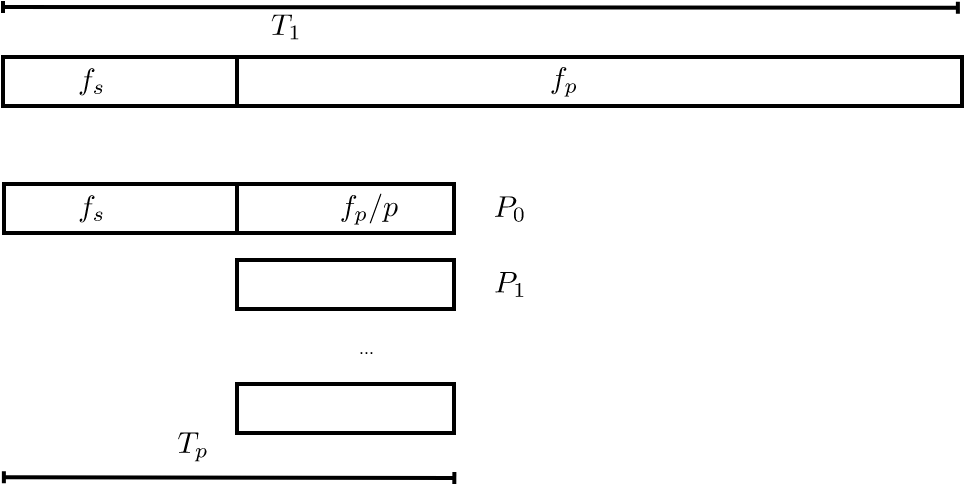

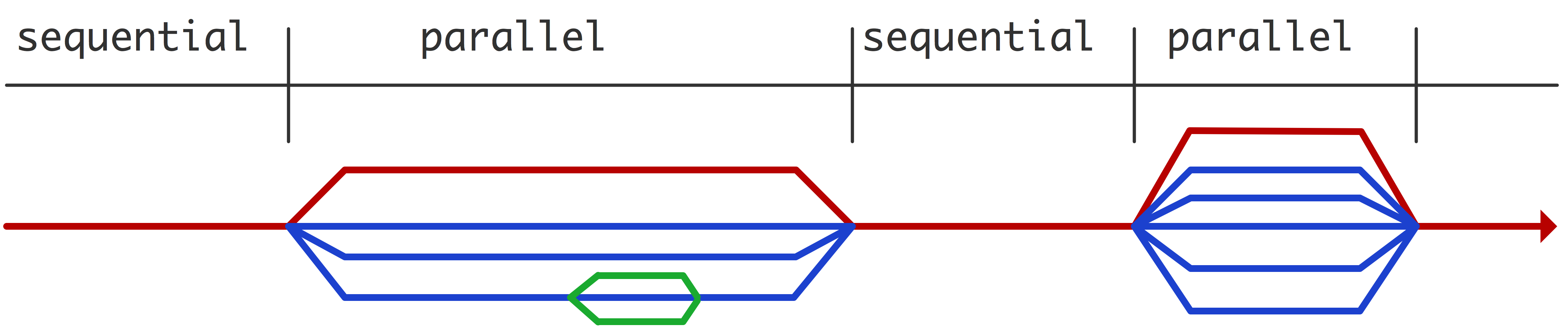

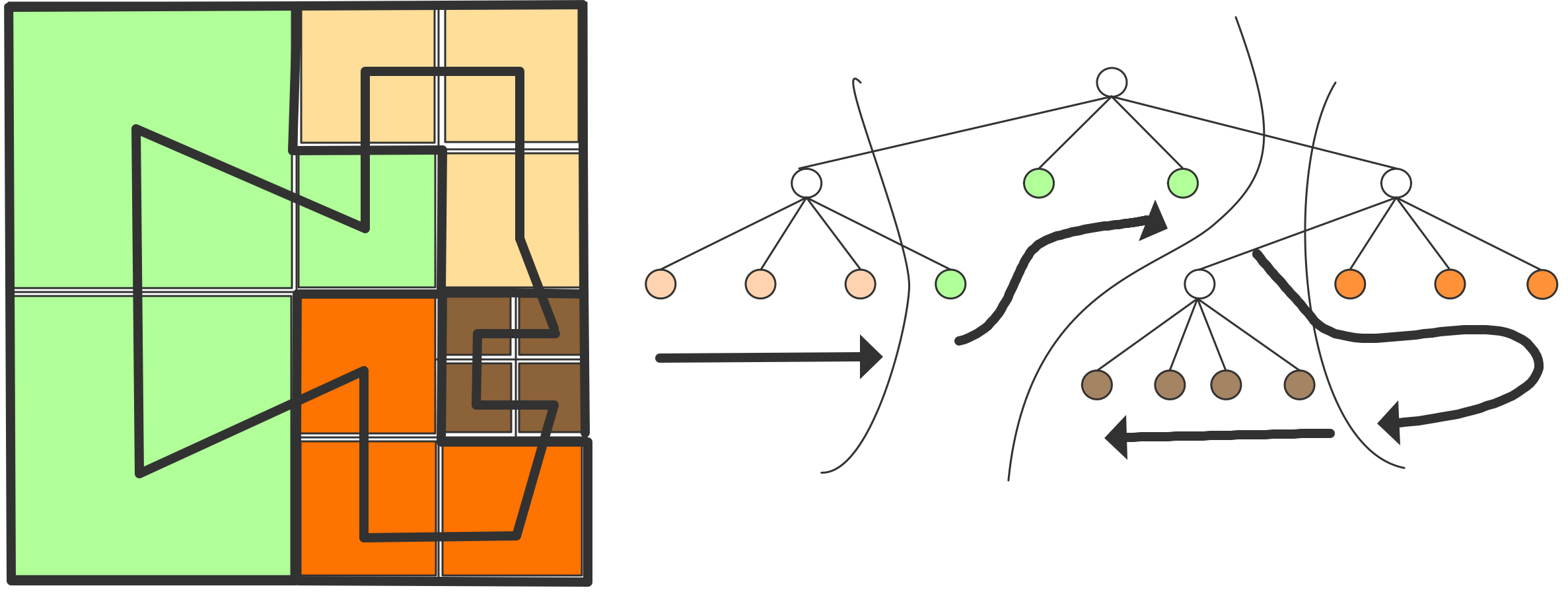

FIGURE 2.4: Sequential and parallel time in Amdahl's analysis.

Let $F_s$ be the sequential fraction and $F_p$ be the parallel fraction (or more strictly: the `parallelizable' fraction) of a code, respectively. Then $F_p+F_s=1$. The parallel execution time $T_P$ on $P$ processors is the sum of the part that is sequential $T_1F_s$ and the part that can be parallelized $T_1F_p/P$: \begin{equation} T_P=T_1(F_s+F_p/P). \label{eq:amdahl} \end{equation} (See figure 2.4.) As the number of processors grows, $P\rightarrow\infty$, the parallel execution time approaches that of the sequential fraction of the code: $T_P\downarrow T_1F_s$. We conclude that speedup is limited by $S_P\leq 1/F_s$ and efficiency is a decreasing function $E\sim 1/P$.
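Amdahl's formula is easy to evaluate numerically. The following sketch computes speedup $T_1/T_P=1/(F_s+F_p/P)$ and the corresponding efficiency; the function and parameter names are chosen for this illustration only.

```c
#include <assert.h>

/* Speedup predicted by Amdahl's law: S_P = 1 / (F_s + F_p/P),
   where F_p = 1 - F_s is the parallelizable fraction. */
double amdahl_speedup(double f_seq, int nprocs) {
  assert(f_seq >= 0.0 && f_seq <= 1.0 && nprocs >= 1);
  double f_par = 1.0 - f_seq;
  return 1.0 / (f_seq + f_par / nprocs);
}

/* Efficiency E_P = S_P / P. */
double amdahl_efficiency(double f_seq, int nprocs) {
  return amdahl_speedup(f_seq, nprocs) / nprocs;
}
```

With a sequential fraction of $0.05$, the predicted speedup on 20 processors is about $10.3$, and it stays below $1/F_s=20$ however large the processor count becomes.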

The sequential fraction of a code can consist of things such as I/O operations. However, there are also parts of a code that in effect act as sequential. Consider a program that executes a single loop, where all iterations can be computed independently. Clearly, this code offers no obstacles to parallelization. However by splitting the loop in a number of parts, one per processor, each processor now has to deal with loop overhead: calculation of bounds, and the test for completion. This overhead is replicated as many times as there are processors. In effect, loop overhead acts as a sequential part of the code.

Exercise

Let's do a specific example. Assume that a code has a setup that takes 1 second and a parallelizable section that takes 1000 seconds on one processor. What are the speedup and efficiency if the code is executed with 100 processors? What are they for 500 processors? Express your answer to at most two significant digits.

End of exercise

Exercise

Investigate the implications of Amdahl's law: if the number of processors $P$ increases, how does the parallel fraction of a code have to increase to maintain a fixed efficiency?

End of exercise

2.2.3.1 Amdahl's law with communication overhead


In a way, Amdahl's law, sobering as it is, is even optimistic. Parallelizing a code will give a certain speedup, but it also introduces communication overhead that will lower the speedup attained. Let us refine our model of Amdahl's law (see [Landau:comp-phys, p. 367]): \begin{equation} T_p= T_1(F_s+F_p/p) +T_c, \end{equation} where $T_c$ is a fixed communication time.

To assess the influence of this communication overhead, we assume that the code is fully parallelizable, that is, $F_p=1$. We then find that \begin{equation} S_p=\frac{T_1}{T_1/p+T_c}. \label{eq:amdahl-comm} \end{equation} For this to be close to $p$, we need $T_c\ll T_1/p$ or $p\ll T_1/T_c$. In other words, the number of processors should not grow beyond the ratio of scalar execution time and communication overhead.
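The saturation at $T_1/T_c$ shows up clearly when the formula is evaluated for growing $p$. This is a minimal sketch of the fully parallelizable case, with a hypothetical function name and time values that are made up for illustration.

```c
#include <assert.h>

/* Speedup of a fully parallelizable code (F_p = 1) with a fixed
   communication time tc: S_p = T_1 / (T_1/p + T_c). */
double speedup_with_comm(double t1, double tc, int p) {
  assert(t1 > 0.0 && tc >= 0.0 && p >= 1);
  return t1 / (t1 / p + tc);
}
```

With $T_1=1000$ and $T_c=1$, the speedup is about $9.9$ on 10 processors and $500$ on 1000 processors, and it never exceeds $T_1/T_c=1000$.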

2.2.3.2 Gustafson's law


Amdahl's law was thought to show that large numbers of processors would never pay off. However, the implicit assumption in Amdahl's law is that there is a fixed computation which gets executed on more and more processors. In practice this is not the case: typically there is a way of scaling up a problem (in chapter Numerical treatment of differential equations you will learn the concept of `discretization'), and one tailors the size of the problem to the number of available processors.

A more realistic assumption would be to say that there is a sequential fraction independent of the problem size, and a parallel fraction that can be arbitrarily replicated. To formalize this, instead of starting with the execution time of the sequential program, let us start with the execution time of the parallel program, and say that \begin{equation} T_p=T(F_s+F_p) \qquad\hbox{with $F_s+F_p=1$}. \end{equation} Now we have two possible definitions of $T_1$. First of all, there is the $T_1$ you get from setting $p=1$ in $T_p$. (Convince yourself that that is actually the same as $T_p$.) However, what we need is $T_1$ describing the time to do all the operations of the parallel program.

(See figure 2.5.) This is: \begin{equation} T_1=F_sT+p\cdot F_pT. \end{equation} This gives us a speedup of \begin{equation} S_p=\frac{T_1}{T_p}=\frac{F_s+p\cdot F_p}{F_s+F_p} = F_s+p\cdot F_p = p-(p-1)\cdot F_s. \label{eq:gustaf-s} \end{equation}

FIGURE 2.5: Sequential and parallel time in Gustafson's analysis.

From this formula we see that:

- Speedup is still bounded by $p$;

- ... but it's a positive number;

- for a given $p$ it's again a decreasing function of the sequential fraction.
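The scaled speedup formula $S_p=p-(p-1)F_s$ can be evaluated in the same way as Amdahl's law. The function below is a sketch with a hypothetical name; it assumes, as in the derivation above, that $F_s$ is measured as a fraction of the parallel running time.

```c
#include <assert.h>

/* Scaled speedup according to Gustafson's law:
   S_p = F_s + p*F_p = p - (p-1)*F_s, with F_s + F_p = 1. */
double gustafson_speedup(double f_seq, int p) {
  assert(f_seq >= 0.0 && f_seq <= 1.0 && p >= 1);
  return p - (p - 1) * f_seq;
}
```

For $F_s=0.05$ and $p=100$ this gives $95.05$, whereas the fixed-size analysis of Amdahl's law would keep the speedup below $20$ for the same sequential fraction.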

Exercise

Rewrite the speedup formula above in terms of $p$ and $F_p$. What is the asymptotic behavior of the efficiency $E_p$?

End of exercise

As with Amdahl's law, we can investigate the behavior of Gustafson's law if we include communication overhead. Let's go back to the speedup formula of section 2.2.3.1 for a perfectly parallelizable problem, $S_p=T_1/(T_1/p+T_c)=p/(1+pT_c/T_1)$, and approximate it for $pT_c\ll T_1$ as \begin{equation} S_p = p(1-\frac{T_c}{T_1}p). \end{equation} Now, under the assumption of a problem that is gradually being scaled up, $T_c$ and $T_1$ become functions of $p$. We see that, if $T_1(p)\sim pT_c(p)$, we get linear speedup with an efficiency that is a constant fraction away from $1$. As a general discussion we can not take this analysis further; in section 6.2.3 you'll see a detailed analysis of an example.

2.2.3.3 Amdahl's law and hybrid programming


Hybrid programming (section 2.6.4) is a mix between distributed and shared memory programming. This leads to a new form of Amdahl's law.

Suppose we have $p$ nodes with $c$ cores each, and $F_p$ describes the fraction of the code that uses $c$-way thread parallelism. We assume that the whole code is fully parallel over the $p$ nodes. The ideal speedup would be $p c$, and the ideal parallel running time $T_1/(pc)$, but the actual running time is \begin{equation} T_{p,c} = T_1 \left(\frac {F_s}{p} + \frac{F_p}{p c}\right) = \frac{T_1}{pc}\left( F_sc+F_p\right) = \frac{T_1}{pc}\left( 1+ F_s(c-1)\right). \end{equation}
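The hybrid running time translates into a speedup $T_1/T_{p,c}=pc/(1+F_s(c-1))$. The sketch below evaluates it; the function name is hypothetical, and `f_seq` stands for $F_s=1-F_p$, the fraction of the code that does not use thread parallelism.

```c
#include <assert.h>

/* Speedup for p nodes with c cores each, where a fraction f_seq of
   the code runs on one core per node:
   T_1 / T_{p,c} = p*c / (1 + f_seq*(c-1)). */
double hybrid_speedup(double f_seq, int p, int c) {
  assert(f_seq >= 0.0 && f_seq <= 1.0 && p >= 1 && c >= 1);
  return (double)p * c / (1.0 + f_seq * (c - 1));
}
```

With $F_s=0.1$, $p=10$ and $c=16$ the function returns $64$, well below the ideal speedup $pc=160$.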

Exercise

Show that the speedup $T_1/T_{p,c}$ can be approximated by $p/F_s$.

End of exercise

In the original Amdahl's law, speedup was limited by the sequential portion to a fixed number $1/F_s$; in hybrid programming it is limited by the task parallel portion to $p/F_s$.

2.2.4 Critical path and Brent's theorem


The above definitions of speedup and efficiency, and the discussion of Amdahl's law and Gustafson's law, made an implicit assumption that parallel work can be arbitrarily subdivided. As you saw in the summing example in section 2.1, this may not always be the case: there can be dependencies between operations, meaning that one operation depends on an earlier one, in the sense of needing its result as input. Dependent operations cannot be executed simultaneously, so they limit the amount of parallelism that can be employed.

We define the critical path as a (possibly non-unique) chain of dependencies of maximum length. (This length is sometimes known as span .) Since the tasks on a critical path need to be executed one after another, the length of the critical path is a lower bound on parallel execution time.

To make these notions precise, we define the following concepts:

Definition

\begin{equation} \begin{array}{l@{\colon}l} T_1&\hbox{the time the computation takes on a single processor}\\ T_p&\hbox{the time the computation takes with $p$ processors}\\ T_\infty&\hbox{the time the computation takes if unlimited processors are available}\\ P_\infty&\hbox{the value of $p$ for which $T_p=T_\infty$} \end{array} \end{equation}

End of definition

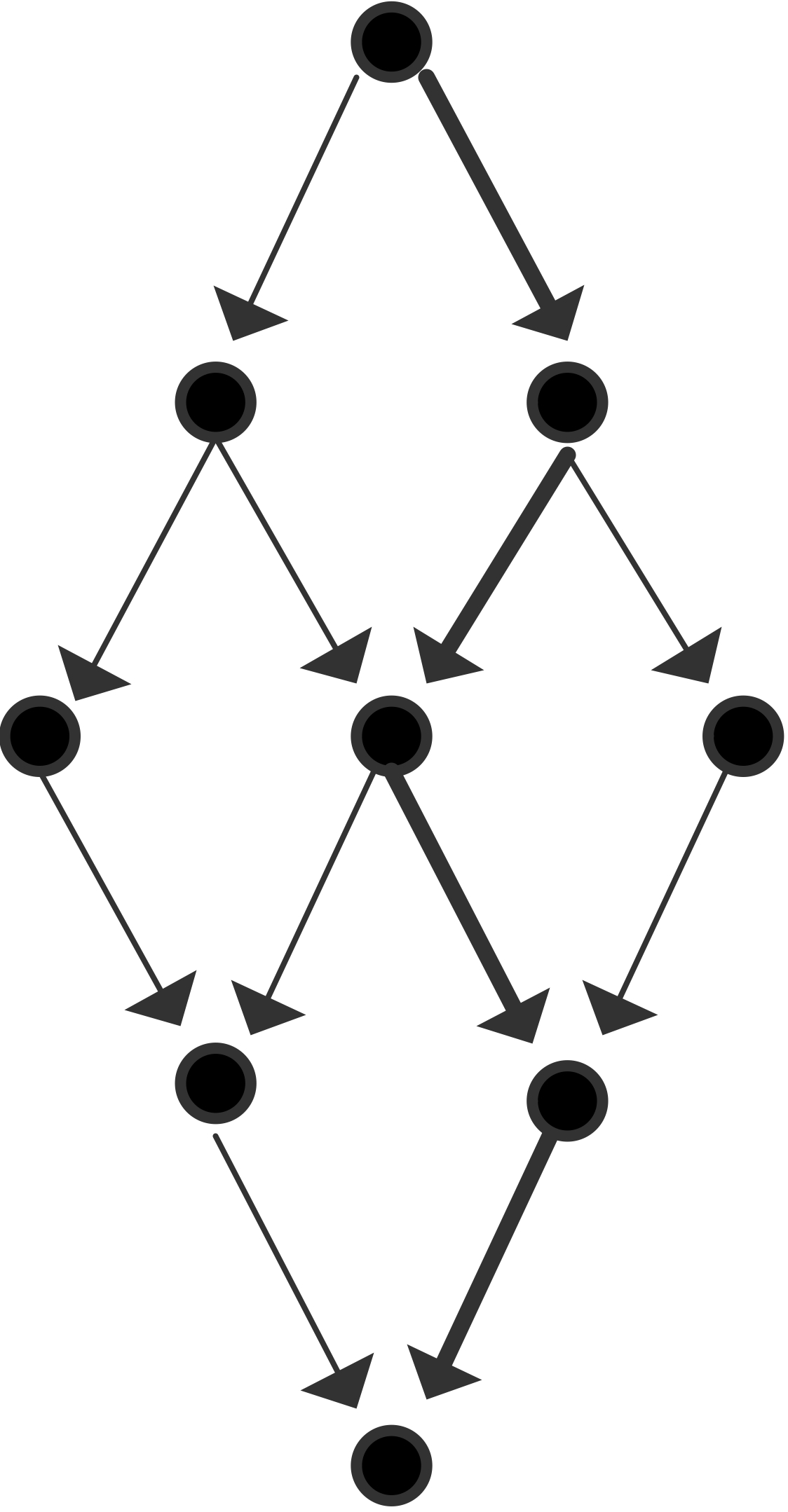

With these concepts, we can define the average parallelism of an algorithm as $T_1/T_\infty$, and the length of the critical path is $T_\infty$.

We will now give a few illustrations by showing a graph of tasks and their dependencies. We assume for simplicity that each node is a unit time task.

The maximum number of processors that can be used is 2 and the average parallelism is $4/3$: \begin{equation} \begin{array}{l} T_1=4,\quad T_\infty=3 \quad\Rightarrow T_1/T_\infty=4/3\\ T_2=3,\quad S_2=4/3,\quad E_2=2/3\\ P_\infty=2 \end{array} \end{equation}

The maximum number of processors that can be used is 3 and the average parallelism is $9/5$; efficiency is maximal for $p=2$: \begin{equation} \begin{array}{l} T_1=9,\quad T_\infty=5 \quad\Rightarrow T_1/T_\infty=9/5\\ T_2=6,\quad S_2=3/2,\quad E_2=3/4\\ T_3=5,\quad S_3=9/5,\quad E_3=3/5\\ P_\infty=3 \end{array} \end{equation}

The maximum number of processors that can be used is 4 and that is also the average parallelism; the figure illustrates a parallelization with $P=3$ that has efficiency $\equiv1$: \begin{equation} \begin{array}{l} T_1=12,\quad T_\infty=3 \quad\Rightarrow T_1/T_\infty=4\\ T_2=6,\quad S_2=2,\quad E_2=1\\ T_3=4,\quad S_3=3,\quad E_3=1\\ T_4=3,\quad S_4=4,\quad E_4=1\\ P_\infty=4 \end{array} \end{equation}

Based on these examples, you probably see that there are two extreme cases:

- If every task depends on precisely one other, you get a chain of dependencies, and $T_p=T_1$ for any $p$.

- On the other hand, if all tasks are independent (and $p$ divides their number) you get $T_p=T_1/p$ for any $p$.

- In a slightly less trivial scenario than the previous, consider the case where the critical path is of length $m$, and in each of these $m$ steps there are $p-1$ independent tasks, or at least: dependent only on tasks in the previous step. There will then be perfect parallelism in each of the $m$ steps, and we find $T_p = T_1/p$, which satisfies the bound $T_p\leq m+ (T_1-m)/p$.

That last bound actually holds in general. This is known as Brent's theorem :

Theorem

Let $m$ be the total number of tasks, $p$ the number of processors, and $t$ the length of a critical path. Then the computation can be done in \begin{equation} T_p \leq t +\frac{m-t}{p}. \end{equation}

End of theorem

Proof

Divide the computation in steps, such that tasks in step $i+1$ are independent of each other, and only dependent on step $i$. Let $s_i$ be the number of tasks in step $i$, then the time for that step is $\lceil \frac{s_i}{p} \rceil$. Summing over $i$ gives \begin{equation} T_p = \sum_{i=1}^t \lceil \frac{s_i}{p} \rceil \leq \sum_{i=1}^t \frac{s_i+p-1}{p} = t + \sum_{i=1}^t \frac{s_i-1}{p} = t+\frac{m-t}{p}. \end{equation}

End of proof
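The counting argument of the proof can be checked numerically with a small C sketch. It assumes the task graph is already given as level sizes $s_i$ (one level per step of the critical path); the function names `level_schedule_time` and `brent_bound` are hypothetical.

```c
#include <assert.h>

/* Time of the level-by-level schedule from the proof: level i holds s[i]
   unit-time tasks, so on p processors it takes ceil(s[i]/p) steps. */
long level_schedule_time(const long *s, int nlevels, long p) {
  assert(p > 0 && nlevels >= 0);
  long time = 0;
  for (int i = 0; i < nlevels; i++) {
    assert(s[i] > 0);
    time += (s[i] + p - 1) / p;   /* integer ceiling of s[i]/p */
  }
  return time;
}

/* Brent's bound t + (m-t)/p, with m the total number of tasks and t the
   number of levels; a double, since the bound need not be an integer. */
double brent_bound(const long *s, int nlevels, long p) {
  assert(p > 0 && nlevels >= 0);
  long m = 0;
  for (int i = 0; i < nlevels; i++)
    m += s[i];
  return nlevels + (double)(m - nlevels) / p;
}
```

For three levels of four tasks each, the level schedule on $p=3$ processors takes 6 steps, which equals the bound $3+9/3$; on $p=4$ it takes 3 steps, well below the bound of $5.25$.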

Exercise Consider a tree of depth $d$, that is, with $2^d-1$ nodes, and a search \begin{equation} \max_{n\in\mathrm{nodes}} f(n). \end{equation} Assume that all nodes need to be visited: we have no knowledge or any ordering on their values.

Analyze the parallel running time on $p$ processors, where you may assume that $p=2^q$, with $q<d$.

End of exercise

Exercise Apply Brent's theorem to Gaussian elimination, assuming that add/multiply/division all take one unit time.

Describe the critical path and give the length. What is the resulting upper bound on the parallel runtime?

How many processors could you theoretically use?

What speedup and efficiency does that give?

End of exercise

2.2.5 Scalability


Above, we remarked that splitting a given problem over more and more processors does not make sense: at a certain point there is just not enough work for each processor to operate efficiently. Instead, in practice users of a parallel code will either choose the number of processors to match the problem size, or they will solve a series of increasingly larger problems on correspondingly growing numbers of processors. In both cases it is hard to talk about speedup. Instead, the concept of scalability is used.

We distinguish two types of scalability. So-called strong scalability is in effect the same as speedup as discussed above. We say that a problem shows strong scalability if, partitioned over more and more processors, it shows perfect or near perfect speedup, that is, the execution time goes down linearly with the number of processors. In terms of efficiency we can describe this as: \begin{equation} \left. \begin{array}{l} N\equiv\mathrm{constant}\\ P\rightarrow\infty \end{array} \right\} \Rightarrow E_P\approx\mathrm{constant} \end{equation} Typically, one encounters statements like `this problem scales up to 500 processors', meaning that up to 500 processors the speedup will not noticeably decrease from optimal. It is not necessary for this problem to fit on a single processor: often a smaller number such as 64 processors is used as the baseline from which scalability is judged.

Exercise We can formulate strong scaling as a runtime that is inversely proportional to the number of processors: \begin{equation} t=c/p. \end{equation} Show that on a log-log plot, that is, you plot the logarithm of the runtime against the logarithm of the number of processors, you will get a straight line with slope $-1$.

Can you suggest a way of dealing with a non-parallelizable section, that is, with a runtime $t=c_1+c_2/p$?

End of exercise

More interestingly, weak scalability describes the behavior of execution as problem size and number of processors both grow, but in such a way that the amount of work per processor stays constant. The term `work' here is ambiguous: sometimes weak scaling is interpreted as keeping the amount of data constant, in other cases it's the number of operations that stays constant.

Measures such as speedup are somewhat hard to report, since the relation between the number of operations and the amount of data can be complicated. If this relation is linear, one could state that the amount of data per processor is kept constant, and report that parallel execution time is constant as the number of processors grows. (Can you think of applications where the relation between work and data is linear? Where it is not?)

In terms of efficiency: \begin{equation} \left. \begin{array}{l} N\rightarrow\infty\\ P\rightarrow\infty\\ M=N/P\equiv\mathrm{constant} \end{array} \right\} \Rightarrow E_P\approx\mathrm{constant} \end{equation}
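The two efficiency statements can be turned into measurements as in the following C sketch. It assumes you have timed a baseline run on `p0` processors and a second run on `p` processors; the function names and the choice of formulas are ours (a common convention, not something the text above prescribes). For weak scaling it further assumes that the work per processor really is constant, so that ideal behavior means constant runtime.

```c
#include <assert.h>

/* Strong scaling: fixed problem size. Efficiency of a run on p processors
   relative to a baseline run on p0 processors (p0 need not be 1). */
double strong_efficiency(int p0, double t0, int p, double tp) {
  assert(p0 > 0 && p >= p0 && t0 > 0.0 && tp > 0.0);
  return (p0 * t0) / (p * tp);
}

/* Weak scaling: work per processor held constant, so ideally the runtime
   does not change; efficiency is the ratio of the two runtimes. */
double weak_efficiency(double t0, double tp) {
  assert(t0 > 0.0 && tp > 0.0);
  return t0 / tp;
}
```

For example, a fixed-size problem that takes 100 seconds on 64 processors and 14 seconds on 512 has a strong scaling efficiency of $6400/7168\approx0.89$ relative to the 64-processor baseline.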

Exercise

Suppose you are investigating the weak scalability of a code. After running it for a couple of sizes and corresponding numbers of processes, you find that in each case the flop rate is roughly the same. Argue that the code is indeed weakly scalable.

End of exercise

Exercise In the above discussion we always implicitly compared a sequential algorithm and the parallel form of that same algorithm. However, in section 2.2.1 we noted that sometimes speedup is defined as a comparison of a parallel algorithm with the best sequential algorithm for the same problem. With that in mind, compare a parallel sorting algorithm with runtime $(\log n)^2$ (for instance, bitonic sort; section 8.6) to the best serial algorithm, which has a running time of $n\log n$.

Show that in the weak scaling case of $n=p$ speedup is $p/\log p$.

Show that in the strong scaling case speedup is a descending function of $n$.

End of exercise

Remark A historical anecdote.

Message: 1023110, 88 lines
Posted: 5:34pm EST, Mon Nov 25/85, imported: ....
Subject: Challenge from Alan Karp
To: Numerical-Analysis, ...
From GOLUB@SU-SCORE.ARPA

I have just returned from the Second SIAM Conference on Parallel Processing for Scientific Computing in Norfolk, Virginia. There I heard about 1,000 processor systems, 4,000 processor systems, and even a proposed 1,000,000 processor system. Since I wonder if such systems are the best way to do general purpose, scientific computing, I am making the following offer. I will pay \$100 to the first person to demonstrate a speedup of at least 200 on a general purpose, MIMD computer used for scientific computing. This offer will be withdrawn at 11:59 PM on 31 December 1995.

This was satisfied by scaling up the problem.

End of remark

2.2.5.1 Iso-efficiency


In the definition of weak scalability above, we stated that, under some relation between problem size $N$ and number of processors $P$, efficiency will stay constant. We can make this precise and define the iso-efficiency curve as the relation between $N,P$ that gives constant efficiency [Grama:1993:isoefficiency] .

2.2.5.2 Precisely what do you mean by scalable?


In scientific computing scalability is a property of an algorithm and the way it is parallelized on an architecture, in particular noting the way data is distributed.

In computer industry parlance the term `scalability' is sometimes applied to architectures or whole computer systems:

``A scalable computer is a computer designed from a small number of basic components, without a single bottleneck component, so that the computer can be incrementally expanded over its designed scaling range, delivering linear incremental performance for a well-defined set of scalable applications. General-purpose scalable computers provide a wide range of processing, memory size, and I/O resources. Scalability is the degree to which performance increments of a scalable computer are linear'' [Bell:outlook] .

In particular,

- horizontal scaling is adding more hardware components, such as adding nodes to a cluster;

- vertical scaling corresponds to using more powerful hardware, for instance by doing a hardware upgrade.

2.2.6 Simulation scaling


In most discussions of weak scaling we assume that the amount of work and the amount of storage are linearly related. This is not always the case; for instance the operation complexity of a matrix-matrix product is $N^3$ for $N^2$ data. If you linearly increase the number of processors, and keep the data per process constant, the work may go up with a higher power.

A similar effect comes into play if you simulate time-dependent PDEs . (This uses concepts from chapter Numerical treatment of differential equations .) Here, the total work is a product of the work per time step and the number of time steps. These two numbers are related; in section 4.1.2 you will see that the time step has a certain minimum size as a function of the space discretization. Thus, the number of time steps will go up as the work per time step goes up.

Consider now applications such as weather prediction , and say that currently you need 4 hours of compute time to predict the next 24 hours of weather. The question is now: if you are happy with these numbers, and you buy a bigger computer to get a more accurate prediction, what can you say about the new computer?

In other words, rather than investigating scalability from the point of view of the running time of an algorithm, in this section we will look at the case where the simulated time $S$ and the running time $T$ are constant. Since the new computer is presumably faster, we can do more operations in the same amount of running time. However, it is not clear what will happen to the amount of data involved, and hence the memory required. To analyze this we have to use some knowledge of the math of the applications.

Let $m$ be the memory per processor, and $P$ the number of processors, giving: \begin{equation} M=Pm\qquad\hbox{total memory.} \end{equation} If $d$ is the number of space dimensions of the problem, typically 2 or 3, we get \begin{equation} \Delta x = 1/M^{1/d}\qquad\hbox{grid spacing.} \end{equation} For stability this limits the time step $\Delta t$ to \begin{equation} \Delta t= \begin{cases} \Delta x=1\bigm/M^{1/d}&\hbox{hyperbolic case}\\ \Delta x^2=1\bigm/M^{2/d}&\hbox{parabolic case} \end{cases} \end{equation} (noting that the hyperbolic case was not discussed in chapter Numerical treatment of differential equations .) With a simulated time $S$, we find \begin{equation} k=S/\Delta t\qquad \hbox{time steps.} \end{equation} If we assume that the individual time steps are perfectly parallelizable, that is, we use explicit methods, or implicit methods with optimal solvers, we find a running time \begin{equation} T=kM/P=\frac{S}{\Delta t}m. \end{equation} Setting $T/S=C$, we find \begin{equation} m=C\Delta t, \end{equation} that is, the amount of memory per processor goes down as we increase the processor count. (What is the missing step in that last sentence?)

Further analyzing this result, we find \begin{equation} m=C\Delta t = C \begin{cases} 1\bigm/M^{1/d}&\hbox{hyperbolic case}\\ 1\bigm/M^{2/d}&\hbox{parabolic case} \end{cases} \end{equation} Substituting $M=Pm$ and solving for $m$, we find ultimately (with a different constant, again denoted $C$) \begin{equation} m = C \begin{cases} 1\bigm/P^{1/(d+1)}&\hbox{hyperbolic}\\ 1\bigm/P^{2/(d+2)}&\hbox{parabolic} \end{cases} \end{equation} that is, the memory per processor that we can use goes down as a higher power of the number of processors.
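The substitution step can be spelled out as follows; the names $C'$ and $C''$ for the new constants are our notation. In the hyperbolic case $m=C/(Pm)^{1/d}$, so \begin{equation} m^{1+1/d} = C\,P^{-1/d} \quad\Rightarrow\quad m = C^{d/(d+1)}\,P^{-1/(d+1)} = C'\bigm/P^{1/(d+1)}. \end{equation} In the parabolic case $m=C/(Pm)^{2/d}$, so \begin{equation} m^{1+2/d} = C\,P^{-2/d} \quad\Rightarrow\quad m = C^{d/(d+2)}\,P^{-2/(d+2)} = C''\bigm/P^{2/(d+2)}. \end{equation} As an example, for a hyperbolic problem in $d=3$ space dimensions, doubling the number of processors changes the usable memory per processor by a factor $2^{-1/4}\approx0.84$, while the total memory $M=Pm$ grows by a factor $2^{3/4}\approx1.68$.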

Exercise Explore simulation scaling in the context of the Linpack benchmark , that is, Gaussian elimination. Ignore the system solving part and only consider the factorization part; assume that it can be perfectly parallelized.

- Suppose you have a single core machine, and your benchmark run takes time $T$ with $M$ words of memory. Now you buy a processor twice as fast, and you want to do a benchmark run that again takes time $T$. How much memory do you need?

- Now suppose you have a machine with $P$ processors, each with $M$ memory, and your benchmark run takes time $T$. You buy a machine with $2P$ processors, of the same clock speed and core count, and you want to do a benchmark run, again taking time $T$. How much memory does each node take?

End of exercise

2.2.7 Other scaling measures


Amdahl's law above was formulated in terms of the execution time on one processor. In many practical situations this is unrealistic, since the problems executed in parallel would be too large to fit on any single processor. Some formula manipulation gives us quantities that are to an extent equivalent, but that do not rely on this single-processor number [Moreland:formalmetrics2015] .

For starters, applying the definition $S_p(n) = \frac{ T_1(n) }{ T_p(n) }$ to strong scaling, we observe that $T_1(n)/n$ is the sequential time per operation, and its inverse $n/T_1(n)$ can be called the sequential computational rate , denoted $R_1(n)$. Similarly defining a `parallel computational rate' \begin{equation} R_p(n) = n/T_p(n) \end{equation} we find that \begin{equation} S_p(n) = R_p(n)/R_1(n) \end{equation} In strong scaling $R_1(n)$ will be a constant, so we make a logarithmic plot of speedup, purely based on measuring $T_p(n)$.
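In code, the rate and the ratio of rates are one-liners. The sketch below is only meant to fix the definitions; the function names are hypothetical, and `n` is taken as a floating point operation count.

```c
#include <assert.h>

/* Computational rate R(n) = n / T(n): operations per unit of time. */
double rate(double n, double t) {
  assert(n > 0.0 && t > 0.0);
  return n / t;
}

/* Ratio of the rates of two runs on the same problem size n. If t_ref is
   the one-processor time, this is the speedup S_p(n) = R_p(n)/R_1(n). */
double rate_ratio(double n, double t_ref, double t_p) {
  return rate(n, t_p) / rate(n, t_ref);
}
```

A problem of $10^6$ operations that runs in 8 seconds in the reference run and in 2 seconds on $p$ processors gives a rate ratio of 4.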

2.2.8 Concurrency; asynchronous and distributed computing


Even on computers that are not parallel there is a question of the execution of multiple simultaneous processes. Operating systems typically have a concept of time slicing , where all active processes are given command of the CPU for a small slice of time in rotation. In this way, a sequential machine can emulate a parallel machine; of course, without the efficiency.

However, time slicing is useful even when not running a parallel application: OSs will have independent processes (your editor, something monitoring your incoming mail, et cetera) that all need to stay active and run more or less often. The difficulty with such independent processes arises from the fact that they sometimes need access to the same resources. The situation where two processes both need the same two resources, each getting hold of one, is called deadlock . A famous formalization of resource contention is known as the dining philosophers problem.

The field that studies such independent processes is variously known as concurrency , asynchronous computing , or distributed computing . The term concurrency describes that we are dealing with tasks that are simultaneously active, with no temporal ordering between their actions. The term distributed computing derives from such applications as database systems, where multiple independent clients need to access a shared database.

We will not discuss this topic much in this book. Section 2.6.1 discusses the thread mechanism that supports time slicing; on modern multicore processors threads can be used to implement shared memory parallel computing.

The book `Communicating Sequential Processes' offers an analysis of the interaction between concurrent processes [Hoare:CSP] . Other authors use topology to analyze asynchronous computing [Herlihy:1999:topological] .

2.3 Parallel Computers Architectures


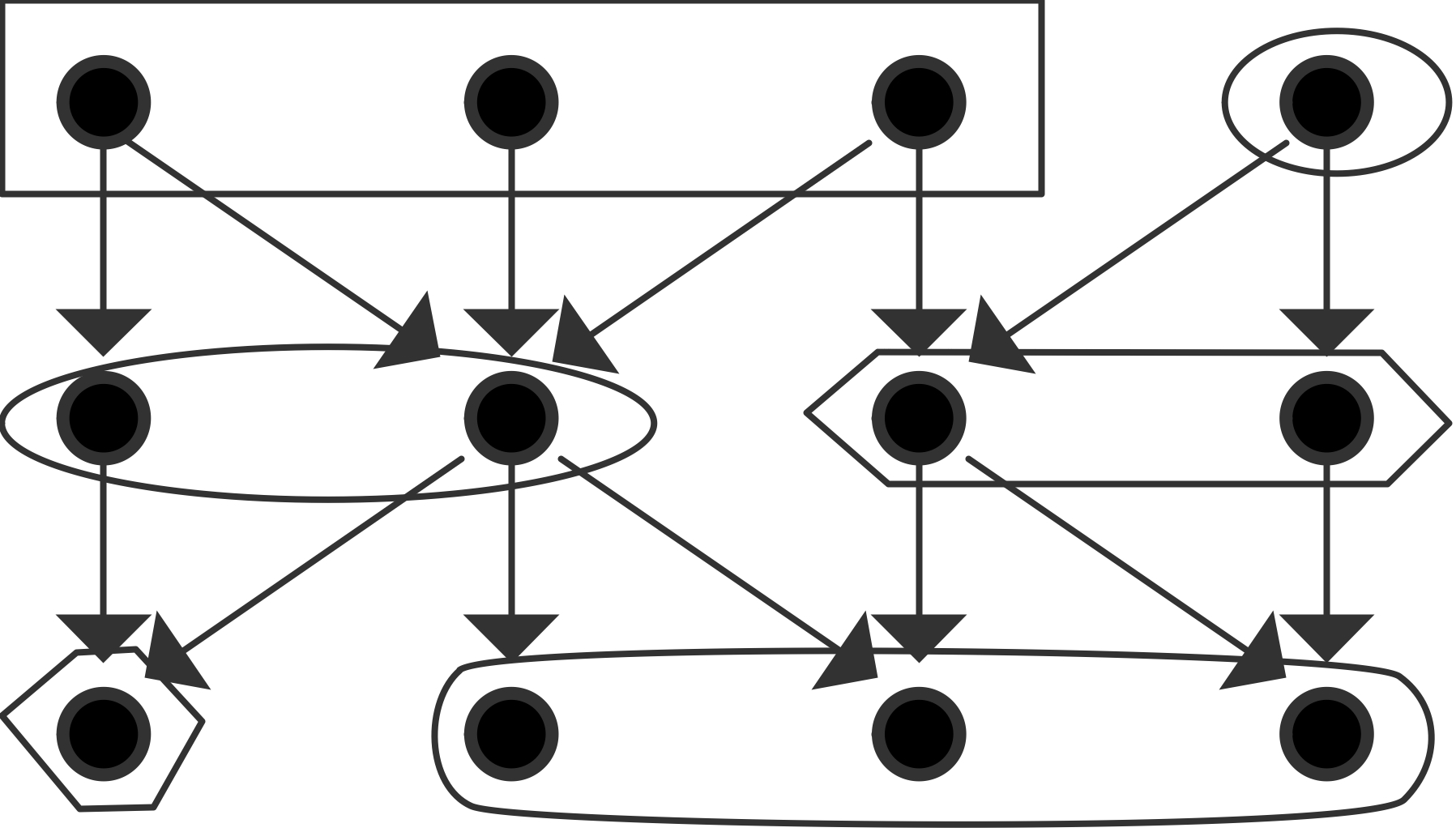

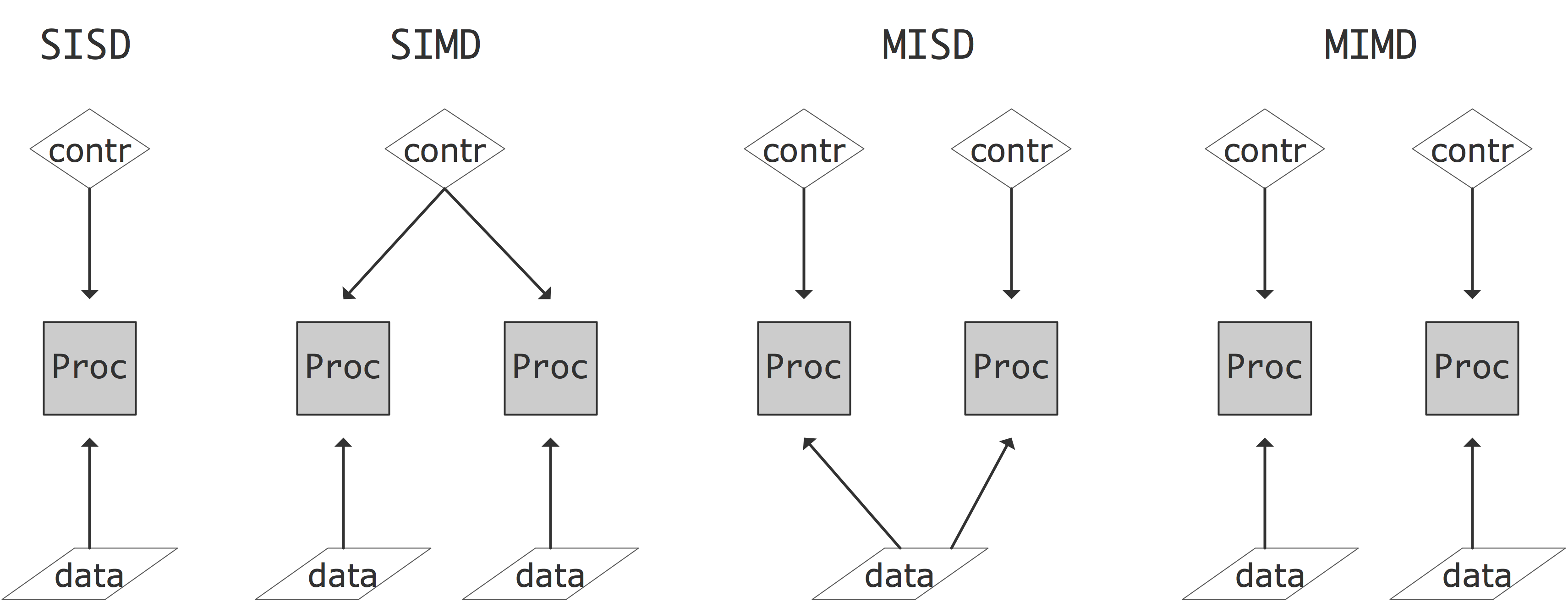

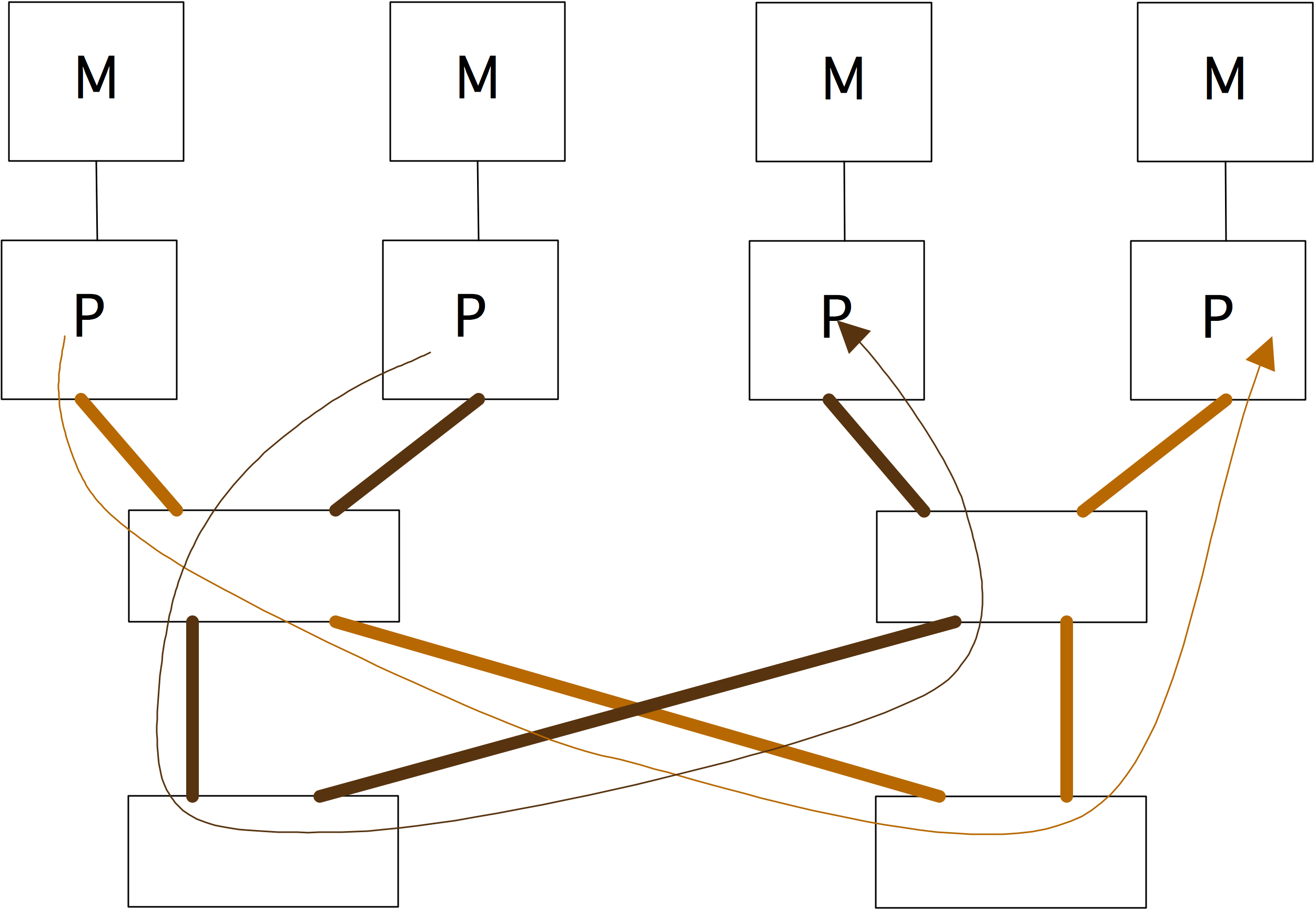

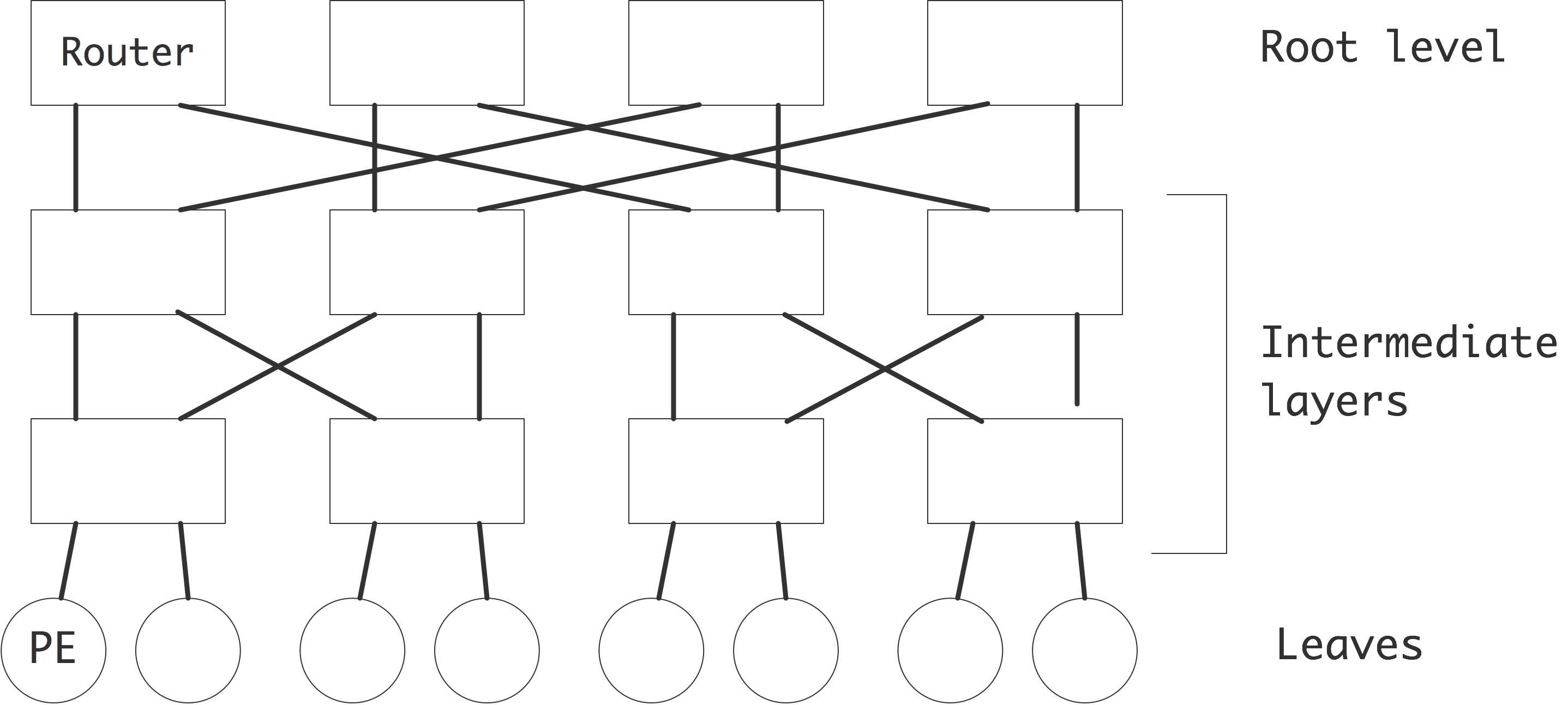

For quite a while now, the top computers have been some sort of parallel computer, that is, an architecture that allows the simultaneous execution of multiple instructions or instruction sequences. One way of characterizing the various forms this can take is due to Flynn [flynn:taxonomy] . Flynn's taxonomy characterizes architectures by whether the data flow and control flow are shared or independent. The following four types result (see also figure 2.6 ):

FIGURE 2.6: The four classes of Flynn's taxonomy.

- [SISD] Single Instruction Single Data: this is the traditional CPU architecture: at any one time only a single instruction is executed, operating on a single data item.

- [SIMD] Single Instruction Multiple Data: in this computer type there can be multiple processors, each operating on its own data item, but they are all executing the same instruction on that data item. Vector computers (section 2.3.1.1 ) are typically also characterized as SIMD.

- [MISD] Multiple Instruction Single Data. No architectures answering to this description exist; one could argue that redundant computations for safety-critical applications are an example of MISD.

- [MIMD] Multiple Instruction Multiple Data: here multiple CPUs operate on multiple data items, each executing independent instructions. Most current parallel computers are of this type.

We will now discuss SIMD and MIMD architectures in more detail.


2.3.1 SIMD


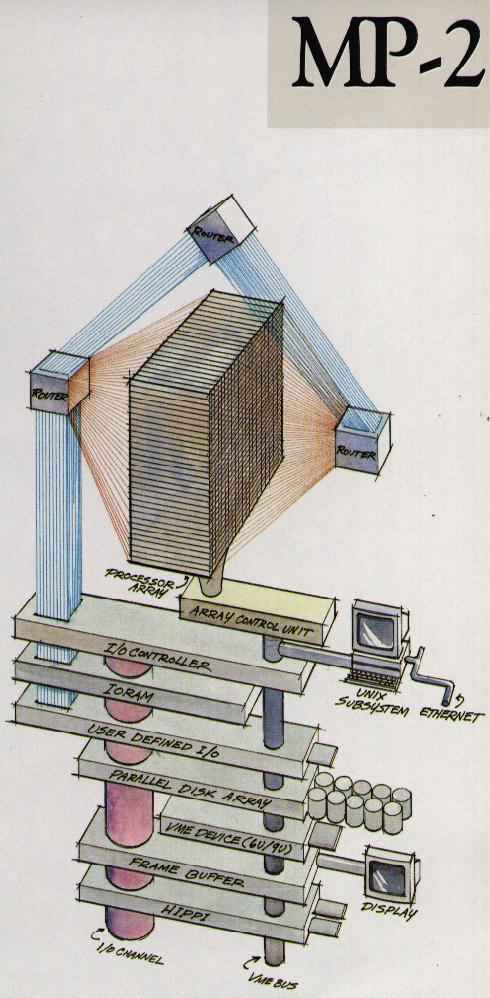

FIGURE 2.7: Architecture of the MasPar 2 array processor.

Parallel computers of the SIMD type apply the same operation simultaneously to a number of data items. The design of the CPUs of such a computer can be quite simple, since the arithmetic unit does not need separate logic and instruction decoding units: all CPUs execute the same operation in lock step. This makes SIMD computers excel at operations on arrays, such as

for (i=0; i<N; i++) a[i] = b[i]+c[i];

and, for this reason, they are also often called array processors. Scientific codes can often be written so that a large fraction of the time is spent in array operations.

On the other hand, there are operations that cannot be executed efficiently on an array processor. For instance, evaluating a number of terms of a recurrence $x_{i+1}=ax_i+b_i$ involves that many additions and multiplications, but they alternate, so only one operation of each type can be processed at any one time. There are no arrays of numbers here that are simultaneously the input of an addition or multiplication.
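The contrast between the two cases shows up in the dependence structure of the loops. The following C sketch writes both out in scalar code; the function names are ours, and the coefficient $a$ of the recurrence is called `alpha` to avoid a clash with the array `a`.

```c
#include <assert.h>

/* Independent iterations: every a[i] can be computed at the same time,
   which is the pattern an array processor is built for. */
void array_add(int n, double *a, const double *b, const double *c) {
  assert(n >= 0);
  for (int i = 0; i < n; i++)
    a[i] = b[i] + c[i];
}

/* Recurrence x[i+1] = alpha*x[i] + b[i]: iteration i needs the result of
   iteration i-1, so the n multiply-adds happen one after another.
   The array x has n+1 entries, and x[0] is the given starting value. */
void recurrence(int n, double alpha, double *x, const double *b) {
  assert(n >= 0);
  for (int i = 0; i < n; i++)
    x[i + 1] = alpha * x[i] + b[i];
}
```

In `array_add` the loop iterations could be executed in any order, or all at once; in `recurrence` they cannot be reordered without changing the result.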

In order to allow for different instruction streams on different parts of the data, the processor would have a `mask bit' that could be set to prevent execution of instructions. In code, this typically looks like

where (x>0) {
  x[i] = sqrt(x[i])
}

The programming model where identical operations are applied to a number of data items simultaneously, is known as data parallelism.
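The effect of the mask bit described above can be emulated in ordinary C: every data item is visited by the same instruction sequence, but the result is only stored where the mask is set. This is a scalar sketch of the idea, not how an array processor is actually programmed; the function name is hypothetical, and we guard a division rather than a square root to keep the example free of library calls.

```c
#include <assert.h>

/* Masked update: replace x[i] by its reciprocal, but only in the
   positions where the mask (x[i] != 0) is set. */
void masked_reciprocal(int n, double *x) {
  assert(n >= 0);
  for (int i = 0; i < n; i++) {
    int mask = (x[i] != 0.0);     /* one mask bit per data item */
    if (mask)
      x[i] = 1.0 / x[i];          /* skipped where the mask is off */
  }
}
```

On a true SIMD machine the processors with the mask bit off sit idle during the masked instruction, so a sparsely set mask translates directly into lost efficiency.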

Such array operations can occur in the context of physics simulations, but another important source is graphics applications. For this application, the processors in an array processor can be much weaker than the processor in a PC: often they are in fact bit processors, capable of operating on only a single bit at a time. Along these lines, ICL had the 4096 processor DAP [DAP:79a] in the 1980s, and Goodyear MPP [Batcher:85a] in the 1970s.

Later, the Connection Machines (CM-1, CM-2, CM-5) were quite popular. While the first Connection Machine had bit processors (16 to a chip), the later models had traditional processors capable of floating point arithmetic, and were not true SIMD architectures. The CM-1 and CM-2 were based on a hypercube interconnection network (see section 2.7.5 ), while the CM-5 used a fat-tree. Another manufacturer that had a commercially successful array processor was MasPar ; figure 2.7 illustrates the architecture. You clearly see the single control unit for a square array of processors, plus a network for doing global operations.

Supercomputers based on array processing do not exist anymore, but the notion of SIMD lives on in various guises. For instance, GPUs are SIMD-based, enforced through their CUDA programming language. Also, the Intel Xeon Phi has a strong SIMD component. While early SIMD architectures were motivated by minimizing the number of transistors necessary, these modern co-processors are motivated by power efficiency considerations. Processing instructions (known as instruction issue ) is actually expensive compared to a floating point operation, in time, energy, and chip real estate needed. Using SIMD is then a way to economize on the last two measures.

2.3.1.1 Pipelining and pipeline processors


A number of computers have been based on a vector processor or pipeline processor design. The first commercially successful supercomputers, the Cray-1 and the Cyber-205 were of this type. In recent times, the Cray-X1 and the NEC SX series have featured vector pipes. The `Earth Simulator' computer [Sato2004] , which led the TOP500 (section 2.11.6 ) for 3 years, was based on NEC SX processors. The general idea behind pipelining was described in section 1.2.1.3 .

While supercomputers based on pipeline processors are in a distinct minority, pipelining is now mainstream in the superscalar CPUs that are the basis for clusters . A typical CPU has pipelined floating point units, often with separate units for addition and multiplication; see section 1.2.1.3 .

However, there are some important differences between pipelining in a modern superscalar CPU and in the more old-fashioned vector units. The pipeline units in these vector computers are not integrated floating point units in the CPU, but can better be considered as attached vector units to a CPU that itself has a floating point unit. The vector unit has vector registers with a typical length of 64 floating point numbers; there is typically no `vector cache'. (Note: the Cyber205 was an exception, with a direct-to-memory architecture.) The logic in vector units is also simpler, often addressable by explicit vector instructions. The pipelines of superscalar CPUs, on the other hand, are fully integrated in the CPU and geared towards exploiting data streams in unstructured code.

2.3.1.2 True SIMD in CPUs and GPUs


True SIMD array processing can be found in modern CPUs and GPUs, in both cases inspired by the parallelism that is needed in graphics applications.

Modern CPUs from Intel and AMD, as well as PowerPC chips, have vector instructions that can perform multiple instances of an operation simultaneously. On Intel processors this is known as SSE or AVX . These extensions were originally intended for graphics processing, where often the same operation needs to be performed on a large number of pixels. Often, the data has to be a total of, say, 128 bits, and this can be divided into two 64-bit reals, four 32-bit reals, or a larger number of even smaller chunks such as 4 bits.

The AVX instructions are based on up to 512-bit wide SIMD, that is, eight double precision floating point numbers can be processed simultaneously. Just as single floating point operations operate on data in registers (section 1.3.3 ), vector operations use vector registers . The locations in a vector register are sometimes referred to as SIMD lanes.

The use of SIMD is mostly motivated by power considerations. Decoding instructions can be more power consuming than executing them, so SIMD parallelism is a way to save power.

Current compilers can generate SSE or AVX instructions automatically; sometimes it is also possible for the user to insert pragmas, for instance with the Intel compiler:

void func(float *restrict c, float *restrict a,
          float *restrict b, int n)
{
#pragma vector always
  for (int i=0; i<n; i++)
    c[i] = a[i] * b[i];
}

Use of these extensions often requires data to be aligned with cache line boundaries (section 1.3.4.7), so there are special allocate and free calls that return aligned memory.
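One portable way to obtain aligned memory is the C11 function `aligned_alloc`; vendor-specific allocators exist as well. The sketch below assumes a 64-byte cache line, which is common but not universal, and the helper names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Allocate room for n floats, aligned on a 64-byte boundary (an assumed
   cache line size). C11 aligned_alloc wants the size to be a multiple of
   the alignment, so the byte count is rounded up. Release with free(). */
float *alloc_aligned_floats(size_t n) {
  const size_t alignment = 64;
  size_t bytes = n * sizeof(float);
  bytes = (bytes + alignment - 1) / alignment * alignment;
  if (bytes == 0)
    bytes = alignment;
  return aligned_alloc(alignment, bytes);
}

/* Report whether a pointer is a multiple of the given alignment. */
int is_aligned(const void *p, size_t alignment) {
  assert(alignment > 0);
  return (uintptr_t)p % alignment == 0;
}
```

Memory obtained this way is released with the ordinary `free`; other aligned allocators may come with their own matching free call.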

Version 4 of OpenMP also has directives for indicating SIMD parallelism.
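A minimal sketch of such a directive, assuming a compiler that implements OpenMP 4.0 or later; the function `scaled_add` is a made-up example, and the caller is assumed to pass arrays that do not overlap.

```c
#include <assert.h>

/* The simd directive tells the compiler that the loop iterations are
   independent, so it may use vector instructions. A compiler without
   OpenMP support ignores the pragma and runs the loop as ordinary C. */
void scaled_add(int n, float alpha, const float *x, float *y) {
  assert(n >= 0);
  #pragma omp simd
  for (int i = 0; i < n; i++)
    y[i] = alpha * x[i] + y[i];
}
```

Unlike the compiler-specific pragma shown earlier, this directive is part of a standard, so the same source can be used with different compilers.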

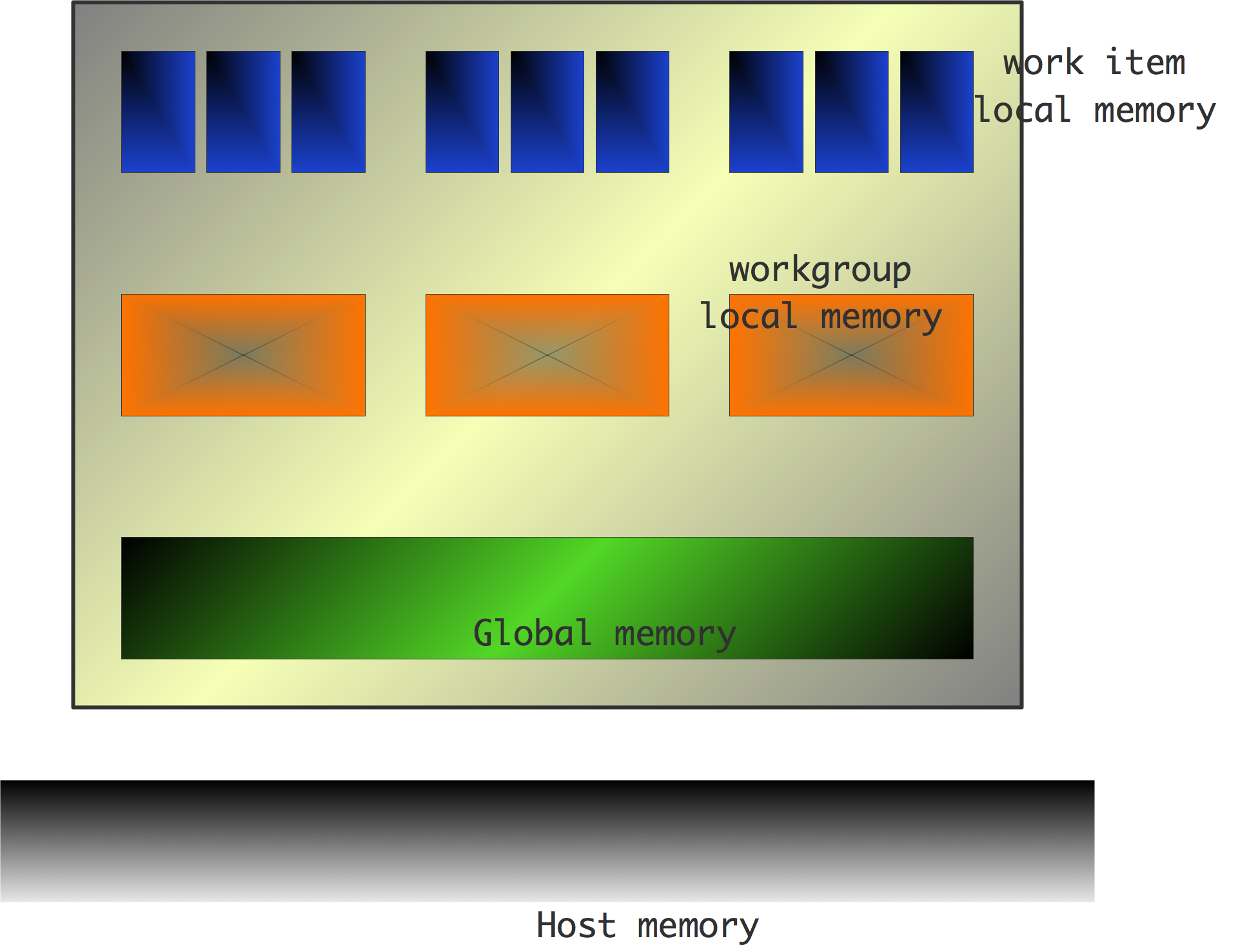

Array processing on a larger scale can be found in GPUs. A GPU contains a large number of simple processors, ordered in groups of 32, typically. Each processor group is limited to executing the same instruction. Thus, this is a true example of SIMD processing. For further discussion, see section 2.9.3 .

2.3.2 MIMD / SPMD computers


By far the most common parallel computer architecture these days is called MIMD : the processors execute multiple, possibly differing instructions, each on their own data. Saying that the instructions differ does not mean that the processors actually run different programs: most of these machines operate in SPMD mode, where the programmer starts up the same executable on the parallel processors. Since the different instances of the executable can take differing paths through conditional statements, or execute differing numbers of iterations of loops, they will in general not be completely in sync as they were on SIMD machines. If this lack of synchronization is due to processors working on different amounts of data, it is called load unbalance , and it is a major source of less than perfect speedup ; see section 2.10 .

There is a great variety in MIMD computers. Some of the aspects concern the way memory is organized, and the network that connects the processors. Apart from these hardware aspects, there are also differing ways of programming these machines. We will see all these aspects below. Many machines these days are called clusters . They can be built out of custom or commodity processors (if they consist of PCs, running Linux, and connected with Ethernet , they are referred to as Beowulf clusters [Gropp:BeowulfBook] ); since the processors are independent they are examples of the MIMD or SPMD model.

2.3.3 The commoditization of supercomputers


In the 1980s and 1990s supercomputers were radically different from personal computers and mini or super-mini computers such as the DEC PDP and VAX series. The SIMD vector computers had one ( CDC Cyber205 or Cray-1 ), or at most a few ( ETA-10 , Cray-2 , Cray X/MP , Cray Y/MP ), extremely powerful processors, often a vector processor. Around the mid-1990s clusters with thousands of simpler (micro) processors started taking over from the machines with relatively small numbers of vector pipes (see http://www.top500.org/lists/1994/11 ). At first these microprocessors ( IBM Power series , Intel i860 , MIPS , DEC Alpha ) were still much more powerful than `home computer' processors, but later this distinction also faded to an extent. Currently, many of the most powerful clusters are powered by essentially the same Intel Xeon and AMD Opteron chips that are available on the consumer market. Others use IBM Power Series or other `server' chips. See section 2.11.6 for illustrations of this history since 1993.

2.4 Different types of memory access


In the introduction we defined a parallel computer as a setup where multiple processors work together on the same problem. In all but the simplest cases this means that these processors need access to a joint pool of data. In the previous chapter you saw how, even on a single processor, memory can have a hard time keeping up with processor demands. For parallel machines, where potentially several processors want to access the same memory location, this problem becomes even worse. We can characterize parallel machines by the approach they take to the problem of reconciling multiple accesses, by multiple processes, to a joint pool of data.

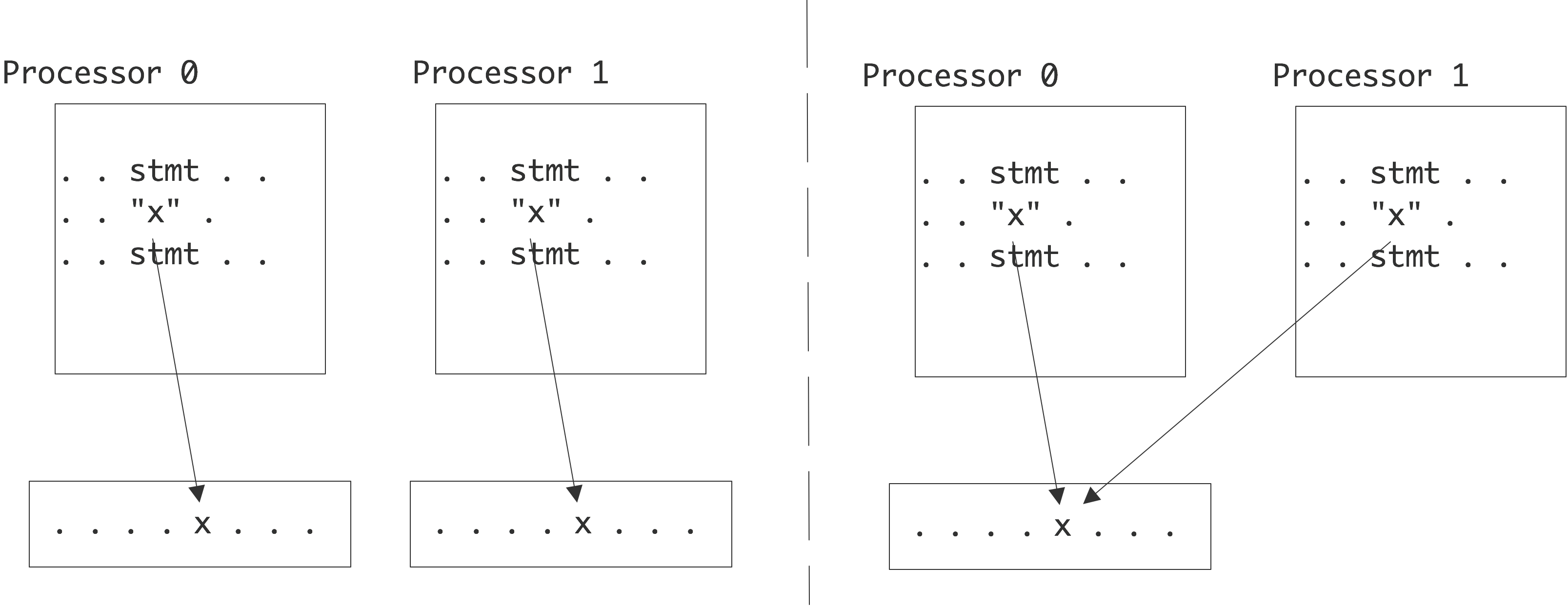

The main distinction here is between distributed memory and shared memory . With distributed memory, each processor has its own physical memory, and more importantly its own address space .

FIGURE: References to identically named variables in the distributed and shared memory case.

Thus, if two processors refer to a variable x , they access a variable in their own local memory. This is an instance of the SPMD model.

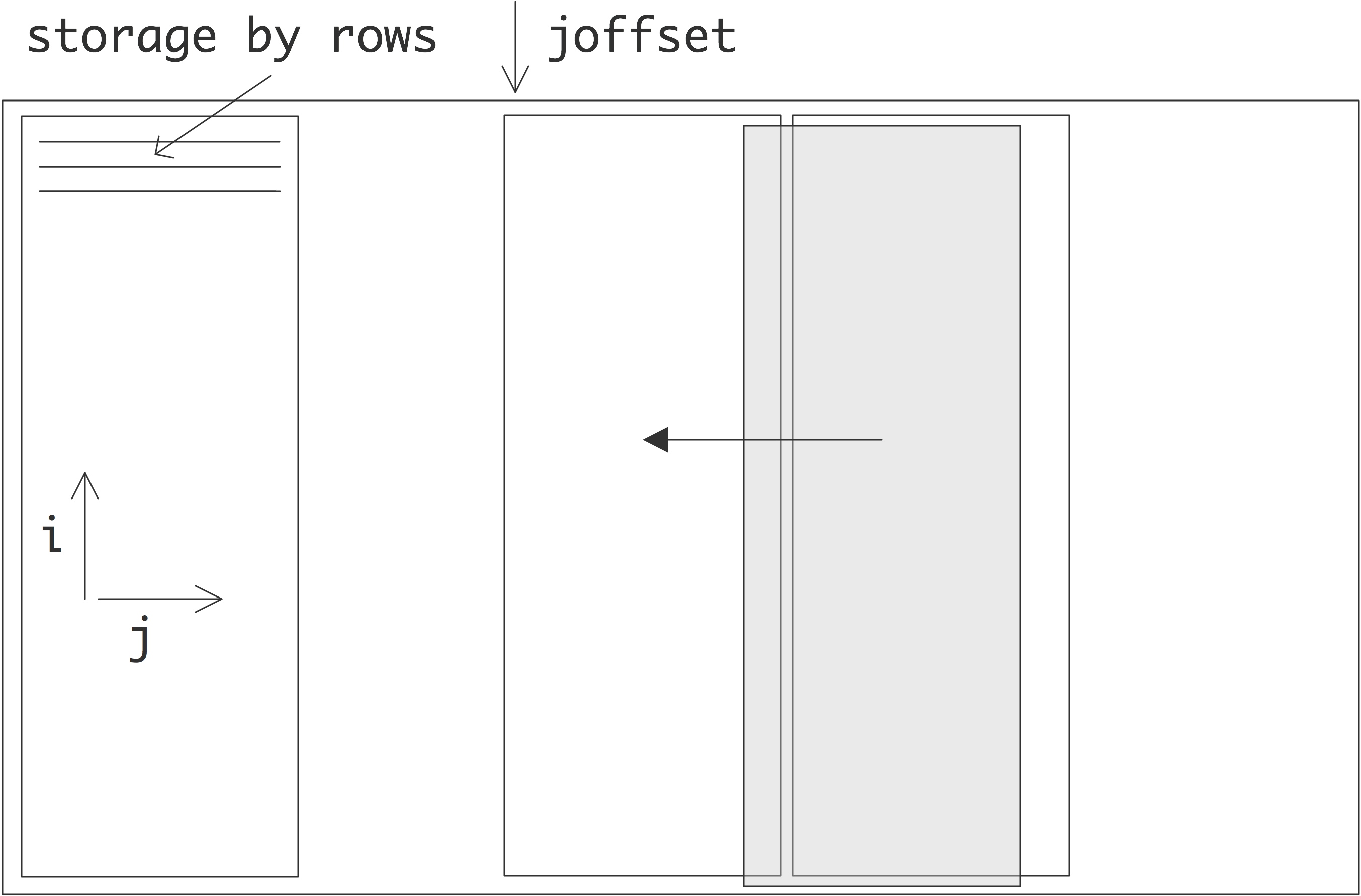

On the other hand, with shared memory, all processors access the same memory; we also say that they have a shared address space . See figure 2.4 .

2.4.1 Symmetric Multi-Processors: Uniform Memory Access


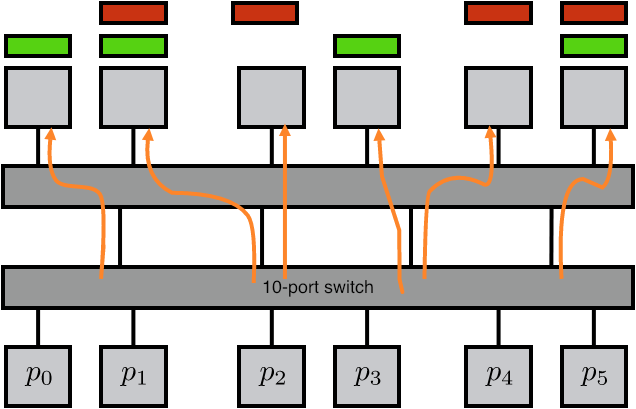

Parallel programming is fairly simple if any processor can access any memory location. For this reason, there is a strong incentive for manufacturers to make architectures where processors see no difference between one memory location and another: any memory location is accessible to every processor, and the access times do not differ. This is called UMA , and the programming model for architectures on this principle is often called SMP .

There are a few ways to realize an SMP architecture. Current desktop computers can have a few processors accessing a shared memory through a single memory bus; for instance Apple markets a model with 2 six-core processors. Having a memory bus that is shared between processors works only for small numbers of processors; for larger numbers one can use a crossbar that connects multiple processors to multiple memory banks; see section 2.7.6. Figure 2.21 and section 2.7.6.2 show the butterfly exchange, which is built up out of simple switching elements.

On multicore processors there is uniform memory access of a different type: the cores typically have a shared cache, usually the L3 or L2 cache.

2.4.2 Non-Uniform Memory Access


The UMA approach based on shared memory is obviously limited to a small number of processors. The crossbar networks are expandable, so they would seem the best choice. However, in practice one puts processors with a local memory in a configuration with an exchange network. This leads to a situation where a processor can access its own memory fast, and other processors' memory slower. This is one case of so-called Non-Uniform Memory Access (NUMA): a strategy that uses physically distributed memory, abandoning the uniform access time, but maintaining the logically shared address space: each processor can still access any memory location.

2.4.2.1 Affinity


When we have NUMA, the question of where to place data, in relation to the process or thread that will access it, becomes important. This is known as affinity; if we look at it from the point of view of placing the processes or threads, it is called process affinity.

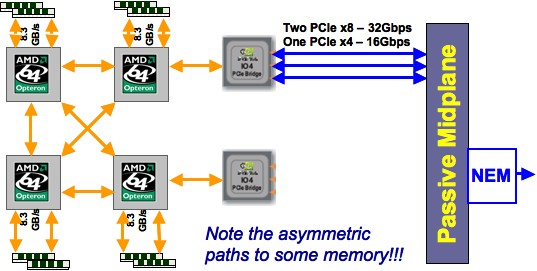

FIGURE 2.8: Non-uniform memory access in a four-socket motherboard.

Figure 2.8 illustrates NUMA in the case of the four-socket motherboard of the TACC Ranger cluster. Each chip has its own memory (8GB) but the motherboard acts as if the processors have access to a shared pool of 32GB. Obviously, accessing the memory of another processor is slower than accessing local memory. In addition, note that each processor has three connections that could be used to access other memory, but the rightmost two chips use one connection to connect to the network. This means that accessing each other's memory can only happen through an intermediate processor, slowing down the transfer, and tying up that processor's connections.

2.4.2.2 Coherence


While the NUMA approach is convenient for the programmer, it offers some challenges for the system. Imagine that two different processors each have a copy of a memory location in their local (cache) memory. If one processor alters the content of this location, this change has to be propagated to the other processors. If both processors try to alter the content of the one memory location, the behavior of the program can become undetermined.

Keeping copies of a memory location synchronized is known as cache coherence (see section 1.4.1 for further details); a multi-processor system using it is sometimes called a `cache-coherent NUMA' or ccNUMA architecture.

Taking NUMA to its extreme, it is possible to have a software layer that makes network-connected processors appear to operate on shared memory. This is known as distributed shared memory or virtual shared memory. In this approach a hypervisor offers a shared memory API, by translating system calls to distributed memory management. This shared memory API can be utilized by the Linux kernel, which can support 4096 threads.

Among current vendors only SGI (the UV line) and Cray (the XE6) market products with large scale NUMA. Both offer strong support for PGAS languages; see section 2.6.5. There are vendors, such as ScaleMP, that offer a software solution to distributed shared memory on regular clusters.

2.4.3 Logically and physically distributed memory


The most extreme solution to the memory access problem is to offer memory that is not just physically, but also logically distributed: the processors have their own address space, and cannot directly see another processor's memory. This approach is often called `distributed memory', but this term is unfortunate, since we really have to consider separately the questions of whether memory is distributed and whether it appears distributed. Note that NUMA also has physically distributed memory; the distributed nature of it is just not apparent to the programmer.

With logically and physically distributed memory, the only way one processor can exchange information with another is through passing information explicitly through the network. You will see more about this in section 2.6.3.3.

This type of architecture has the significant advantage that it can scale up to large numbers of processors: the IBM BlueGene has been built with over 200,000 processors. On the other hand, this is also the hardest kind of parallel system to program.

Various kinds of hybrids between the above types exist. In fact, most modern clusters will have NUMA nodes, but a distributed memory network between nodes.

2.5 Granularity of parallelism


In this section we look at parallelism from the point of view of how far to subdivide the work over processing elements. The concept we explore here is that of granularity: the balance between the amount of independent work per processing element, and how often processing elements need to synchronize. We talk of `large grain parallelism' if there is a lot of work in between synchronization points, and `small grain parallelism' if that amount of work is small. Obviously, in order for small grain parallelism to be profitable, the synchronization needs to be fast; with large grain parallelism we can tolerate more costly synchronization.

The discussion in this section will be mostly on a conceptual level; in section 2.6 we will go into some detail on actual parallel programming.

2.5.1 Data parallelism


It is fairly common for a program to have loops with a simple body that gets executed for all elements in a large data set:

    for (i=0; i<1000000; i++)
      a[i] = 2*b[i];

Such code is considered an instance of data parallelism or fine-grained parallelism. If you had as many processors as array elements, this code would look very simple: each processor would execute the statement

    a = 2*b

on its local data.
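In practice there are usually far fewer processors than array elements, so each processor executes the statement on a block of elements rather than on a single one. The following C sketch illustrates this under the assumption of a simple block distribution; the function names `block_range`, `scale_block`, and `scale_all` are made up for the illustration, and the loop over processors is a sequential stand-in for what would really run in parallel.

```c
#include <assert.h>
#include <stddef.h>

/* Half-open index range [*lo, *hi) owned by processor p out of nprocs,
   for a loop of n iterations. The blocks are disjoint and cover 0..n-1. */
void block_range(size_t n, size_t nprocs, size_t p, size_t *lo, size_t *hi) {
    assert(nprocs > 0 && p < nprocs);
    *lo = p * n / nprocs;
    *hi = (p + 1) * n / nprocs;
}

/* The work of one processor: the statement a = 2*b on its own block. */
void scale_block(size_t lo, size_t hi, const double *b, double *a) {
    for (size_t i = lo; i < hi; i++)
        a[i] = 2 * b[i];
}

/* Sequential stand-in for the parallel execution. Since no block reads or
   writes another block's elements, the iterations of the p-loop are
   independent and could be given to nprocs processors at once. */
void scale_all(size_t n, size_t nprocs, const double *b, double *a) {
    for (size_t p = 0; p < nprocs; p++) {
        size_t lo, hi;
        block_range(n, nprocs, p, &lo, &hi);
        scale_block(lo, hi, b, a);
    }
}
```

For instance, with 10 elements and 3 processors this distribution gives the index ranges 0..2, 3..5, and 6..9.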

If your code consists predominantly of such loops over arrays, it can be executed efficiently with all processors in lockstep. Architectures based on this idea, where the processors can in fact only work in lockstep, have existed; see section 2.3.1. Such fully parallel operations on arrays appear in computer graphics, where every pixel of an image is processed independently. For this reason, GPUs (section 2.9.3) are strongly based on data parallelism.

The CUDA language, invented by NVidia, allows for elegant expression of data parallelism. Later developed languages, such as Sycl, or libraries such as Kokkos, aim at similar expression, but are more geared towards heterogeneous parallelism.

Continuing the above example for a little bit, consider the operation

\begin{displayalgorithm}
\For{$0\leq i<\mathrm{max}$}{
  $i_{\mathrm{left}}=\mod(i-1,\mathrm{max})$\;
  $i_{\mathrm{right}}=\mod(i+1,\mathrm{max})$\;
  $a_i = (b_{i_{\mathrm{left}}}+b_{i_{\mathrm{right}}})/2$}
\end{displayalgorithm}

On a data parallel machine, that could be implemented as

\begin{displayalgorithm}
\SetKw{shiftleft}{shiftleft}
\SetKw{shiftright}{shiftright}
$\mathtt{bleft} \leftarrow \shiftright(\mathtt{b})$\;
$\mathtt{bright} \leftarrow \shiftleft(\mathtt{b})$\;
$\mathtt{a} \leftarrow (\mathtt{bleft}+\mathtt{bright})/2$
\end{displayalgorithm}

where the shiftleft/right instructions cause a data item to be sent to the processor with a number lower or higher by 1. For this second example to be efficient, it is necessary that each processor can communicate quickly with its immediate neighbors, and the first and last processor with each other.
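The shift formulation can be mimicked on an ordinary processor by treating each shift as a whole-array operation. The C sketch below is only an illustration of the idea, not a data parallel implementation: the function names and the caller-provided scratch arrays `bleft` and `bright` are assumptions of this sketch, and the cyclic index arithmetic takes the place of the communication between the first and last processor.

```c
#include <assert.h>
#include <stddef.h>

/* out[i] = in[i-1], cyclically: every element moves one place to the right,
   so each position receives the value of its left neighbor. */
void shift_right(size_t n, const double *in, double *out) {
    assert(n > 0);
    for (size_t i = 0; i < n; i++)
        out[i] = in[(i + n - 1) % n];
}

/* out[i] = in[i+1], cyclically: every element moves one place to the left,
   so each position receives the value of its right neighbor. */
void shift_left(size_t n, const double *in, double *out) {
    assert(n > 0);
    for (size_t i = 0; i < n; i++)
        out[i] = in[(i + 1) % n];
}

/* a = (bleft + bright) / 2, written as three whole-array operations.
   bleft and bright are scratch arrays of length n. */
void average_neighbors(size_t n, const double *b,
                       double *bleft, double *bright, double *a) {
    shift_right(n, b, bleft);
    shift_left(n, b, bright);
    for (size_t i = 0; i < n; i++)
        a[i] = (bleft[i] + bright[i]) / 2;
}
```

For b = (0, 2, 4, 6) the two shifts give bleft = (6, 0, 2, 4) and bright = (2, 4, 6, 0), so that a = (4, 2, 4, 2).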

In various contexts such a `blur' operations in graphics, it makes sense to have operations on 2D data:

\begin{displayalgorithm}

\For{$0

and consequently processors have be able to move data to neighbors in

a 2D grid.

crumb trail: > parallel > Granularity of parallelism > Instruction-level parallelism

In

ILP

, the parallelism is still on the level of individual

instructions, but these need not be similar. For instance, in

\begin{displayalgorithm}

$a

the two assignments are independent, and can therefore be executed

simultaneously. This kind of parallelism is too cumbersome for humans

to identify, but compilers are very good at this. In

fact, identifying

ILP

is crucial for getting good performance out

of modern

superscalar

CPUs.

crumb trail: > parallel > Granularity of parallelism > Task-level parallelism

At the other extreme from data and instruction-level parallelism,

task parallelism

that can be executed in parallel.

As an example, a

search in a tree

data structure could be implemented as follows:

{SearchInTree}{root}

\SetKw{optimal}{optimal}\SetKw{exit}{exit}\SetKw{search}{SearchInTree}\SetKw{parl}{parallel}

\eIf{\optimal(root)}{\exit}

{\parl: \search(leftchild),\search(rightchild)}

The search tasks in this example are not synchronized, and the number

of tasks is not fixed: it can grow arbitrarily. In practice, having

too many tasks is not a good idea, since processors are most efficient

if they work on just a single task. Tasks can then be scheduled as

follows:

\begin{displayalgorithm}

\While{there are tasks left}{

wait until a processor becomes inactive;

(There is a subtle distinction between the two previous

pseudo-codes. In the first, tasks were self-scheduling: each task

spawned off two new ones. The second code is an example of

the

manager-worker paradigm

: there is one central task which

lives for the duration of the code, and which spawns and assigns the

worker tasks.)

Unlike in the data parallel example above, the assignment of data to

processor is not determined in advance in such a scheme. Therefore,

this mode of parallelism is most suited for thread-programming, for

instance through the OpenMP library; section

2.6.2

.

Let us consider a more serious example of task-level parallelism.

A finite element mesh is, in the simplest case, a collection of

triangles that covers a 2D object. Since angles that are too acute

should be avoided, the

Delauney mesh refinement

process

can take certain triangles, and replace them by better shaped

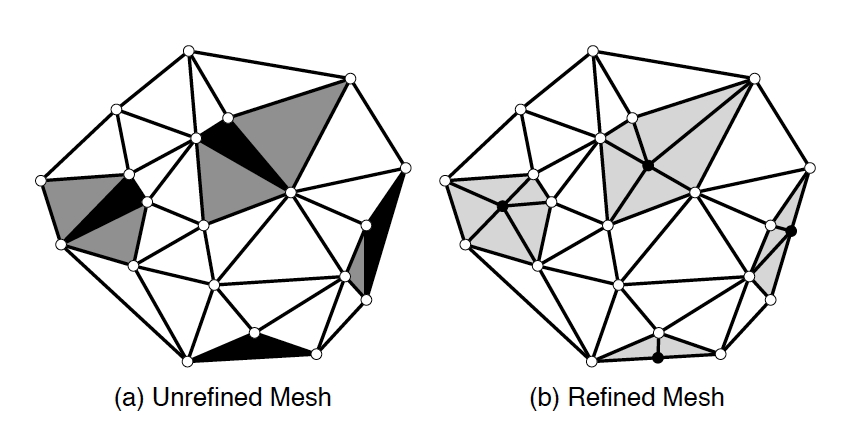

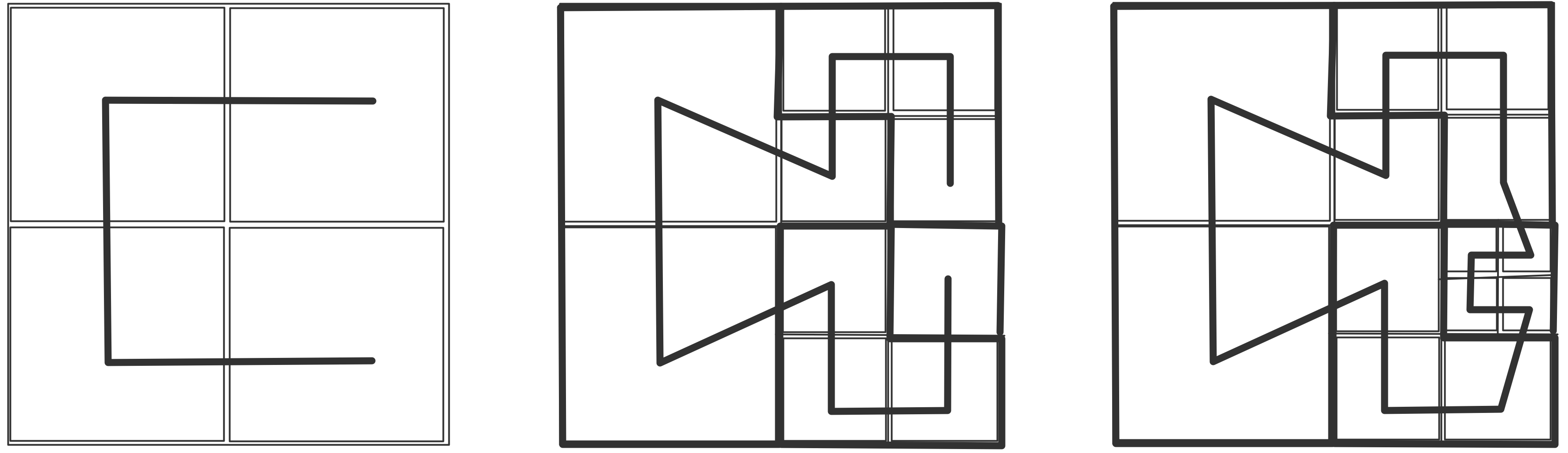

ones. This is illustrated in figure

2.9

: the black

triangles violate some angle condition, so either they themselves get

subdivided, or they are joined with some neighboring ones (rendered

in grey) and then jointly redivided.

FIGURE 2.9: A mesh before and after refinement.2.5.2 Instruction-level parallelism

leftarrow b+c$

$d

leftarrow e*f$

\end{displayalgorithm}

2.5.3 Task-level parallelism

spawn a new task on it}

\end{displayalgorithm}

In pseudo-code, this can be implemented as in figure 2.10.

\begin{displayalgorithm}
Mesh mesh = /* read in initial mesh */
WorkList wl;
wl.add(mesh.badTriangles());
\While {(wl.size() != 0)} {
  Element e = wl.get(); // get bad triangle
  if (e no longer in mesh) continue;
  Cavity c = new Cavity(e);
  c.expand();
  c.retriangulate();
  mesh.update(c);
  wl.add(c.badTriangles());
}
\end{displayalgorithm}

FIGURE 2.10: Task queue implementation of Delaunay refinement.

(This figure and code are to be found in [Kulkami:howmuch], which also contains a more detailed discussion.)

It is clear that this algorithm is driven by a worklist (or task queue) data structure that has to be shared between all processes. Together with the dynamic assignment of data to processes, this implies that this type of irregular parallelism is suited to shared memory programming, and is much harder to do with distributed memory.
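The worklist pattern itself can be shown in a much simpler setting. The following C sketch is a one-dimensional analog, not Delaunay refinement: an interval counts as `bad' if it is longer than a given bound, and processing a bad interval replaces it by its two halves, which go back on the worklist. The types `Interval` and `WorkList`, the fixed capacity, and the function `refine` are all assumptions made for this illustration, and the loop is sequential.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { double lo, hi; } Interval;

enum { WORKLIST_CAPACITY = 64 };   /* arbitrary bound for this sketch */

typedef struct {
    Interval items[WORKLIST_CAPACITY];
    size_t size;
} WorkList;

static void worklist_add(WorkList *wl, Interval e) {
    assert(wl->size < WORKLIST_CAPACITY);
    wl->items[wl->size++] = e;
}

/* Remove and return the most recently added item. */
static Interval worklist_get(WorkList *wl) {
    assert(wl->size > 0);
    return wl->items[--wl->size];
}

/* Split [lo,hi] until no piece is longer than max_len;
   return the number of pieces that were accepted. */
size_t refine(double lo, double hi, double max_len) {
    assert(hi > lo && max_len > 0);
    WorkList wl = { .size = 0 };
    size_t accepted = 0;
    worklist_add(&wl, (Interval){ lo, hi });
    while (wl.size != 0) {
        Interval e = worklist_get(&wl);
        if (e.hi - e.lo <= max_len) {   /* good element: nothing to do */
            accepted++;
            continue;
        }
        double mid = 0.5 * (e.lo + e.hi);
        /* Processing one item creates new work, as in the refinement loop. */
        worklist_add(&wl, (Interval){ e.lo, mid });
        worklist_add(&wl, (Interval){ mid, e.hi });
    }
    return accepted;
}
```

As in figure 2.10, the amount of work is not known in advance: `refine(0.0, 8.0, 1.0)` ends with 8 pieces, but this only becomes clear while the worklist is being processed. In a parallel version the `WorkList` would be the shared structure that all processes add to and take from.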

2.5.4 Conveniently parallel computing


In certain contexts, a simple, often single processor, calculation needs to be performed on many different inputs. Since the computations have no data dependencies and need not be done in any particular sequence, this is often called embarrassingly parallel or conveniently parallel computing. This sort of parallelism can happen at several levels. In examples such as calculation of the Mandelbrot set or evaluating moves in a chess game, a subroutine-level computation is invoked for many parameter values. On a coarser level it can be the case that a simple program needs to be run for many inputs. In this case, the overall calculation is referred to as a parameter sweep.
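For the Mandelbrot example, the subroutine-level computation could look like the following C sketch. The function names `mandel_iters` and `mandel_sweep`, the escape radius of 2, and the iteration limit passed by the caller are choices made for this illustration. The point is the structure of the sweep: each call depends only on its own input, so the calls can be done in any order or on different processors.

```c
#include <assert.h>
#include <stddef.h>

/* Number of iterations of z <- z*z + c, starting from z = 0, that are
   performed before |z| exceeds 2; at most max_iter. */
int mandel_iters(double cr, double ci, int max_iter) {
    assert(max_iter >= 0);
    double zr = 0.0, zi = 0.0;
    int k = 0;
    while (k < max_iter && zr * zr + zi * zi <= 4.0) {
        double t = zr * zr - zi * zi + cr;   /* real part of z*z + c */
        zi = 2.0 * zr * zi + ci;             /* imaginary part of z*z + c */
        zr = t;
        k++;
    }
    return k;
}

/* Sweep over n input points. No iteration reads a result of another
   iteration, which is what makes the sweep conveniently parallel. */
void mandel_sweep(size_t n, const double *cr, const double *ci,
                  int max_iter, int *iters) {
    for (size_t k = 0; k < n; k++)
        iters[k] = mandel_iters(cr[k], ci[k], max_iter);
}
```

Note that the cost per point varies: with a limit of 100, the point c = 0 uses all 100 iterations, while c = 2+2i escapes after a single one. When such a sweep is distributed over processors, this uneven cost is a reason to assign points dynamically rather than in fixed blocks.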

2.5.5 Medium-grain data parallelism